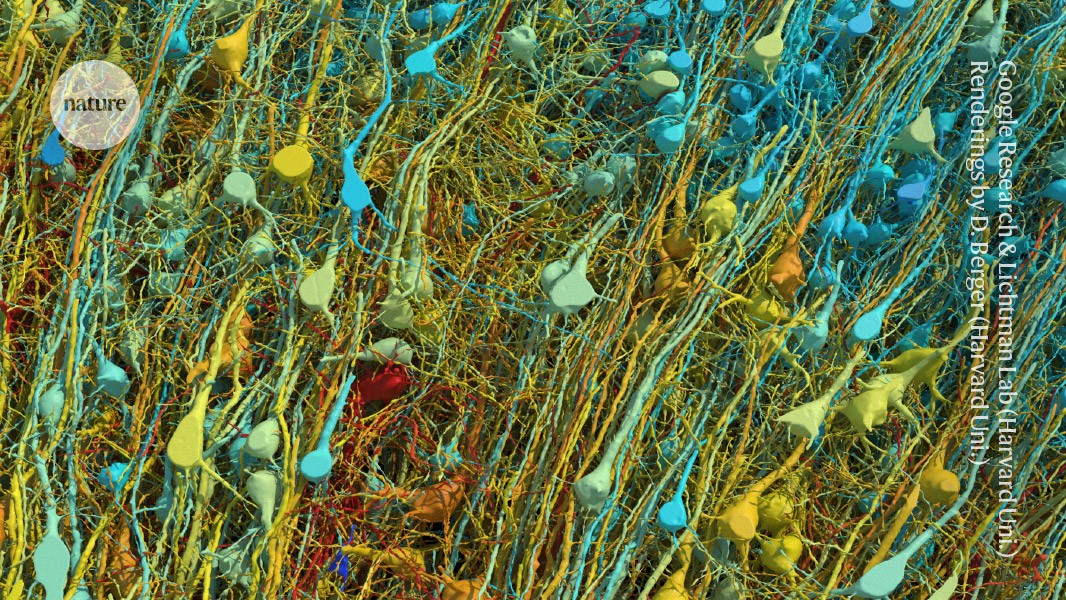

There is an eerie resemblance between the smallest neuron and the largest structure in the universe: the galaxy filament.

Noam Chomsky said “we don’t know what happens when you cram 10^5 neurons* into a space the size of a basketball”, but what little we know is astonishing and a marvel.

*whatever the number is

Aha! This is why I can’t think straight! Spaghetti!

I thought this was a close-up of a fuzzy sweater and was like: “cool.” Then I read the title. “Oh, fuck, yeah.”

This is exactly what I’m talking about when I argue with people who insist that an LLM is super complex and totally is a thinking machine just like us.

It’s nowhere near the complexity of the human brain. We are several orders of magnitude more complex than the largest LLMs, and our complexity changes with each pulse of thought.

The brain is amazing. This is such a cool image.

I agree, but it isn’t so clear cut. Where is the cutoff on complexity required? As it stands, both our brains and the most complex AI are pretty much black boxes. It’s impossible to say whether this system we know vanishingly little about is or isn’t fundamentally the same as that system we know vanishingly little about, just on a different scale. The first AGI will likely still have most people saying the same things about it: “it isn’t complex enough to approach a human brain.” But it doesn’t need to equal a brain to still be intelligent.

> but it isn’t so clear cut

It’s demonstrably several orders of magnitude less complex. That’s mathematically clear cut.

> Where is the cutoff on complexity required?

That’s a philosophical question without an answer. We do know that it’s nowhere near the complexity of the brain.

> both our brains and most complex AI are pretty much black boxes.

There are many things we cannot directly interrogate which we can still describe.

> It’s impossible to say this system we know vanishingly little about is/isn’t fundamentally the same as this system we know vanishingly little about, just on a different scale

It’s entirely possible to say that, because we know the fundamental structures of each, even if we haven’t mapped the entirety of either’s complexity. We know they’re fundamentally different. Their basic behaviors are fundamentally different. That’s what fundamentals are.

> The first AGI will likely still have most people saying the same things about it, “it isn’t complex enough to approach a human brain.”

Speculation, but entirely possible. We’re nowhere near that, though. There’s nothing even approaching intelligence in LLMs. We’ve never seen emergent behavior or evidence of an id or ego. There are no ongoing thought processes and no rationality, because that’s not what an LLM is. An LLM is a static model of raw text inputs and the statistical associations between them. Any “knowledge” encoded in an LLM exists entirely in the encoding. It cannot and will not ever generate anything that wasn’t programmed into it.

It’s possible that an LLM might represent a single, tiny, module of AGI in the future. But that module will be no more the AGI itself than you are your cerebellum.

> But it doesn’t need to equal a brain to still be intelligent.

First thing I think we agree on.

LLMs don’t work like the human brain; you are comparing apples to suspension bridges.

The human brain works through a series of interconnected nodes and complex chemical interactions. LLMs work on multi-dimensional search spaces; their “brains” exist in 15 billion spatial dimensions. Yours doesn’t, so you can’t draw any meaningful comparison between the two. All you can do is challenge it with human-level tasks and see how it stacks up. You can’t estimate it from complexity.

> LLMs work on multi-dimensional search spaces

You’re missing half of it. The data cube is just for storing and finding weights. Those weights are then loaded into the nodes of a neural network to do the actual work. The neural network was inspired by actual brains.

That cable management is horrendous. Pull them out.

But it’s the spaghetti cabling that makes it work and highly robust.

Incredible. Very humbling.

Let’s see Paul Allen’s brain scan.