- cross-posted to:

- technologie@jlai.lu

I think addictive feeds on social media should be banned, but the problem is that the bill is so open to interpretation there’s no way to enforce it.

Just for kids? It needs to be totally banned for everyone!

All I want to know is: will this push companies to rethink infinite scroll? Like, even to make it a toggleable option.

I really appreciate that Lemmy still has distinct pages. “I’ll stop at the end of this page” is the easiest way to quit a social media session, which is why most companies have eliminated it.

Everyone needs to stop doom scrolling. It adds nothing to your life and just makes other people money instead.

These kids today, always writing things down and reading them. Scrolls! In my day we remembered things! Remember that? Course not, we didn’t write it down and your memories are all mush because kids these days are always writing things down and reading them!

Reminded me of this bit from Barge Ballad

Oh you’ve got to remember

Way up atop the mast

Knowing all the river routes

That you never learn from the charts

Well I do remember

It raises the question of what does or doesn’t count as an addictive feed. I bet this doesn’t specify any particular dark pattern or monetization model.

If we gave half a fuck about mental wellness regarding mobile use, we would have addressed all this when it was particular to mobile games.

No, this is about our kids learning early how fucked society is, and how their own generation is being fed a pro-ownership-class indoctrination regimen before being appointed a string of dead-end toxic jobs.

Social media is how we learn about the genocide in Gaza, police officer-involved homicide rates, and unionization efforts. And that is why they want kids off social media.

Don’t make me put up the koala cartoon again.

This shit applies directly to lemmy. Y’all seem to be blinded by your hate of TikTok.

The algorithm here is pretty simple. It’s an open source project and you can go directly see that the code isn’t designed to maximise the user’s engagement, but rather simply to elevate more recent or popular posts. Sites like YouTube and Facebook develop much more complex recommendation algorithms with the goal of keeping people on their platform as long as possible.

I honestly wouldn’t mind. Addictive feeds, no matter the platform, are poison for a developing mind. The first generations raised on addictive feeds are showing apathy towards pretty much everything. And they’re easily brainwashed. Just look at the EU elections: teens predominantly voted for the far right. You don’t do that if you are of sound mind.

The problem is algorithmically driven content feeds and the lack of transparency around them. These algorithms optimize for engagement, which prioritizes content that makes people angry, not content that makes people happy. These feeds are full of misinformation, conspiratorial thinking, rage bait, and other negativity, with very little user control to protect oneself, curate the feed, or get neutral access to news and politics.

Lemmy sorts content very simply based on user upvotes. If you want to know why you’re seeing a post you can see exactly who upvoted it and what instances that traffic came from. It’s not immune to being manipulated but it can’t be done secretly or in a centralized way.

Yet based on their actions we already know that Facebook has levers they can pull to directly affect the amount of news people see about a specific topic, let alone the source of information on that topic. These big social media companies guard these proprietary algorithms that are directly determining what news people see on a massive scale. Sure they claim to be a neutral arbiter of content that just gives people what they want but why would anyone believe them?

Lemmy is not the same thing, though it’s not without its own problems.

Lemmy has Hot and Top. Both of these count as addictive algorithms.

Here is a bit of information on how Lemmy’s “Hot” sorting works.

I’m not going to argue about how addictive any given feed or sorting method is, but this method is content neutral, does not adjust based on user behavior (besides which communities you subscribe to) and is completely transparent as all post interactions are public. With this type of sorting users can be sure that certain content is not prioritized over others (outside of community rules or mod actions). Having a more neutral straightforward ranking system that isn’t based on user behavior reduces addictiveness and is less likely to form echo chambers. This makes it easier to see more diverse content, reduces the spread of misinformation and is much more difficult to manipulate.
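The “Hot” sort described above can be sketched in a few lines of Python. This is a minimal sketch, assuming the formula as described in Lemmy’s documentation, roughly `Rank = ScaleFactor * log(max(1, Score + 3)) / (Hours + 2)^Gravity`, where Score is upvotes minus downvotes and Hours is the time since posting. The constants (10000 and 1.8), the `Post` class, and the function names are assumptions for illustration, not Lemmy’s actual implementation.

```python
import math
from dataclasses import dataclass

# Assumed constants, based on the documented "Hot" formula; not taken from Lemmy's source.
SCALE_FACTOR = 10_000  # only scales the number, does not change the ordering
GRAVITY = 1.8          # how quickly older posts sink


@dataclass
class Post:
    title: str
    score: int        # upvotes minus downvotes
    age_hours: float  # hours since the post was published


def hot_rank(post: Post) -> float:
    # Popularity grows logarithmically with score; the floor of 1 means a
    # new or downvoted post gets log10(1) = 0 instead of a negative value.
    popularity = math.log10(max(1, post.score + 3))
    # The denominator grows with age, so every post decays over time.
    decay = (post.age_hours + 2) ** GRAVITY
    return SCALE_FACTOR * popularity / decay


def sort_hot(posts: list[Post]) -> list[Post]:
    # Same inputs (score, age) for every reader: no per-user signals.
    return sorted(posts, key=hot_rank, reverse=True)


def sort_top(posts: list[Post]) -> list[Post]:
    # "Top" simply orders by net score.
    return sorted(posts, key=lambda p: p.score, reverse=True)


if __name__ == "__main__":
    posts = [
        Post("old but popular", 500, 48.0),
        Post("new and modest", 20, 1.0),
        Post("new, no votes", 0, 0.5),
    ]
    print([p.title for p in sort_hot(posts)])
    print([p.title for p in sort_top(posts)])
```

With these numbers, Hot puts the two fresh posts above the two-day-old one despite its much higher score, while Top orders purely by score. Nothing in `hot_rank` looks at who is reading, so everyone subscribed to the same communities sees the same order.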

Thank you for posting this crucial context for the algorithms. I didn’t even know this information was available.

On the upside they have the third largest army in the world taking up all of their resources and pissing all over the citizens

There is ONE problem, the parents.

You shouldn’t ban everything in your state… Parents should be educated about it and tell their kids to stop, but these days parents are overwhelmed by all the tech stuff and don’t understand any of it. Sadly. The problem is more global: even 20- to 25-year-old adults waste their days doomscrolling… This shouldn’t be banned (though yes, the companies behind it do evil stuff); otherwise they’ll end up banning everything.

Neat, how about actually making some sweeping regulations tackling corporate EULA-washed malware?

Doesn’t help when those who write the laws are on the take.

That said, as difficult as it is these days, avoid all of these companies’ products like the plague.

How do they prove your age? People who aren’t tech-savvy probably just give their kids a phone and don’t do much to lock it down.

This is the best summary I could come up with:

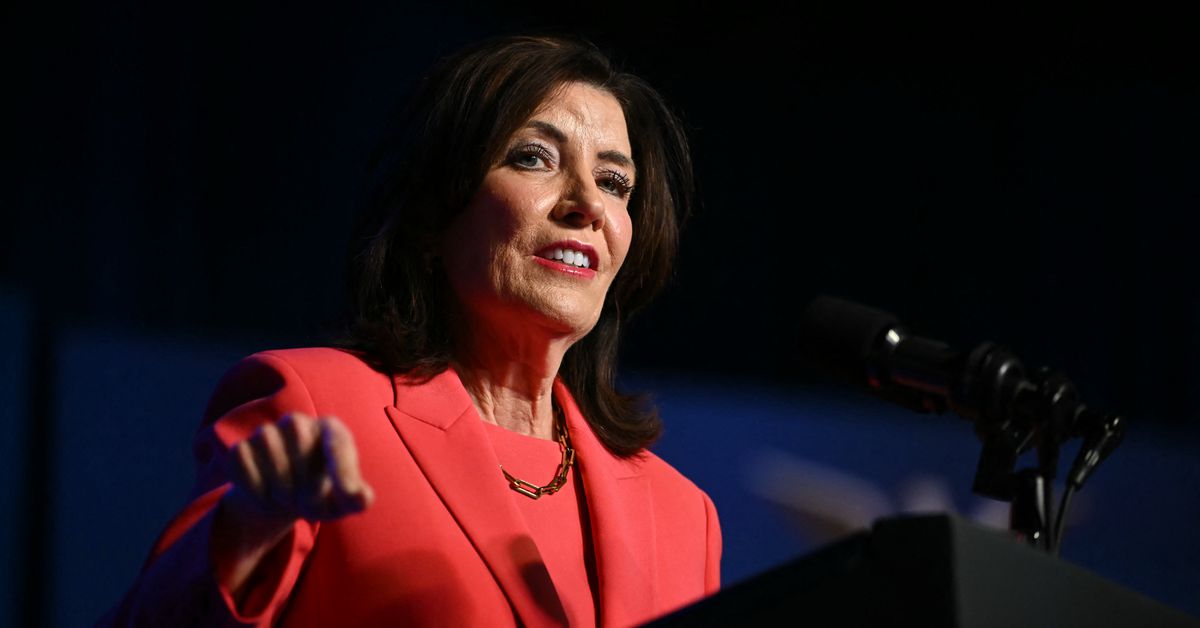

New York Governor Kathy Hochul (D) signed two bills into law on Thursday that aim to protect kids and teens from social media harms, making it the latest state to take action as federal proposals still await votes.

Florida Governor Ron DeSantis (R), for example, signed into law in March a bill requiring parents’ consent for kids under 16 to hold social media accounts.

The bill instructs the attorney general’s office to lay out appropriate age verification methods and says those can’t solely rely on biometrics or government identification.

A California court blocked that state’s Age-Appropriate Design Code last year, which sought to address data collection on kids and make platforms more responsible for how their services might harm children.

NetChoice vice president and general counsel Carl Szabo said in a statement that the law would “increase children’s exposure to harmful content by requiring websites to order feeds chronologically, prioritizing recent posts about sensitive topics.”

Adam Kovacevich, CEO of center-left tech industry group Chamber of Progress, warned that the SAFE for Kids Act will “face a constitutional minefield” because it deals with what speech platforms can show users.

The original article contains 746 words, the summary contains 188 words. Saved 75%. I’m a bot and I’m open source!