You just haven’t gaslighted your AI into saying the glue thing. If you keep pushing with things like “what about non-toxic glue” or “aren’t there glues designed for humans”, the AI will eventually give in and recommend the glue. Don’t give up. Glue is good for us.

Glue is what keeps us together!

This post is sponsored by big glue.

These are statistical models, which means you’ll get a different answer each time, and also different answers depending on context.

Not exactly. The answers would be identical for identical inputs if they didn’t intentionally inject some random jitter into the algorithm each time, specifically so you don’t get the same answer every time.

That jitter is automatically present because different people will get different search results, so it’s not really intentional or purposeful.

Yes it is intentional.

Some interfaces even expose a way to set the “temperature”: higher values mean more randomized output (which feels creative), lower values mean less randomness. A temperature of 0 will make the model deterministic.
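
To make the temperature knob concrete, here is a minimal sketch assuming the usual softmax-with-temperature formulation over next-token scores. The function name and the example scores are hypothetical, and treating a temperature of 0 as greedy (argmax) decoding is a common convention, not any specific product’s code.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw next-token scores (logits) into probabilities.

    Dividing by the temperature before the softmax sharpens the
    distribution when temperature < 1 and flattens it when > 1.
    A temperature of 0 is treated as greedy decoding: all probability
    goes to the highest-scoring token.
    """
    if temperature == 0:
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three candidate tokens.
logits = [2.0, 1.0, 0.5]
for t in (0, 0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

Lower temperatures push more probability onto the top token; at 0 the choice stops being random at all, which is the behaviour the comment above describes.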

Even at a temperature of 0, the model will not be deterministic, because the output also depends on the seed used, as well as on things like numerical noise.
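
One concrete form of “numerical noise” is that floating-point addition is not associative, so summing the same numbers in a different order (as parallel GPU kernels may do) can change the last bits of a result. The sketch below shows that effect in plain Python; the two candidate tokens, their scores, and the `greedy_pick` helper are hypothetical, and whether a particular deployment is affected depends on its hardware and batching.

```python
# Floating-point addition is not associative: the grouping changes the rounding.
a = (0.1 + 0.2) + 0.3
b = 0.1 + (0.2 + 0.3)
print(a, b, a == b)  # 0.6000000000000001 0.6 False


def greedy_pick(cheese_score: float, glue_score: float) -> str:
    """Temperature-0 style choice between two hypothetical candidate tokens."""
    return "cheese" if cheese_score > glue_score else "glue"


# The same "cheese" score computed in two summation orders flips the
# winner against a near-tied rival.
print(greedy_pick(a, 0.6))  # cheese
print(greedy_pick(b, 0.6))  # glue
```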

Yeah no, that’s not how this works.

Where in the process does that seed play a role, and what do you even mean by numerical noise?

Edit: I feel like I should add that I am very interested in learning more. If you can point me to any sources showing that GPTs are inherently random, I am happy to eat my own hat.

https://github.com/ollama/ollama/blob/main/docs/api.md#request-reproducible-outputs

LLMs are run with a seed. If you change the seed, you get a different answer.
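
A toy illustration of what a seed does, assuming sampling from a fixed next-token distribution with Python’s `random.Random`. This is not Ollama’s implementation, and the `sample_tokens` helper and the probabilities are hypothetical. Real decoding samples token by token from distributions the model recomputes at each step, but the role of the seed is analogous.

```python
import random


def sample_tokens(probs: dict[str, float], n: int, seed: int) -> list[str]:
    """Draw n tokens from a fixed distribution using a seeded generator.

    The same seed always reproduces the same sequence of draws.
    """
    rng = random.Random(seed)
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=n)


# Hypothetical distribution over pizza toppings.
probs = {"cheese": 0.6, "basil": 0.3, "glue": 0.1}
print(sample_tokens(probs, 5, seed=42))
print(sample_tokens(probs, 5, seed=42))  # identical to the line above
print(sample_tokens(probs, 5, seed=7))   # may differ
```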

It’s not just random jitter; it also likely adds context, including the device you’re using, your other recent queries, and your rough location (like what state you’re in).

I don’t work for Google, but I am somewhat close to a major AI product, and it’s pretty much industry standard to give the model some contextual info in addition to your query. It’s also generally not “one model” but a set of models run in sequence, with the LLM (think ChatGPT) only employed at the end to write a paragraph from a conclusion and evidence found by a previous model.
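
A minimal sketch of the idea that contextual signals become part of the model input and that the LLM is only the last stage of a chain. Every function name, context field, and stage here is hypothetical; this is not a description of Google’s or any other product’s pipeline.

```python
def build_model_input(query: str, context: dict[str, str]) -> str:
    """Prepend hypothetical contextual signals to the user's query."""
    header = "\n".join(f"{key}: {value}" for key, value in sorted(context.items()))
    return f"{header}\nQuery: {query}"


def run_pipeline(query, context, retrieve, conclude, write):
    """Run a chain of stages; the final writer (the LLM) only turns an
    earlier stage's conclusion and evidence into a paragraph."""
    model_input = build_model_input(query, context)
    evidence = retrieve(model_input)
    conclusion = conclude(evidence)
    return write(conclusion, evidence)


# Toy stand-ins for the earlier models and the final LLM (all hypothetical).
def retrieve(text):
    return [line for line in text.splitlines() if line.startswith("Region")]


def conclude(evidence):
    return f"{len(evidence)} relevant signal(s)"


def write(conclusion, evidence):
    return f"Based on {conclusion}: {'; '.join(evidence)}"


q = "best pizza near me"
print(run_pipeline(q, {"Device": "phone", "Region": "Ohio"}, retrieve, conclude, write))
print(run_pipeline(q, {"Device": "phone", "Region": "Texas"}, retrieve, conclude, write))
```

Every stage here is deterministic, yet the two calls produce different answers because only the context differs, which is why context can reasonably be counted as part of the input.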

I consider “context”, even if not added explicitly by the user, to be part of the input.

Well, they manually removed that one. But there are much better ones:

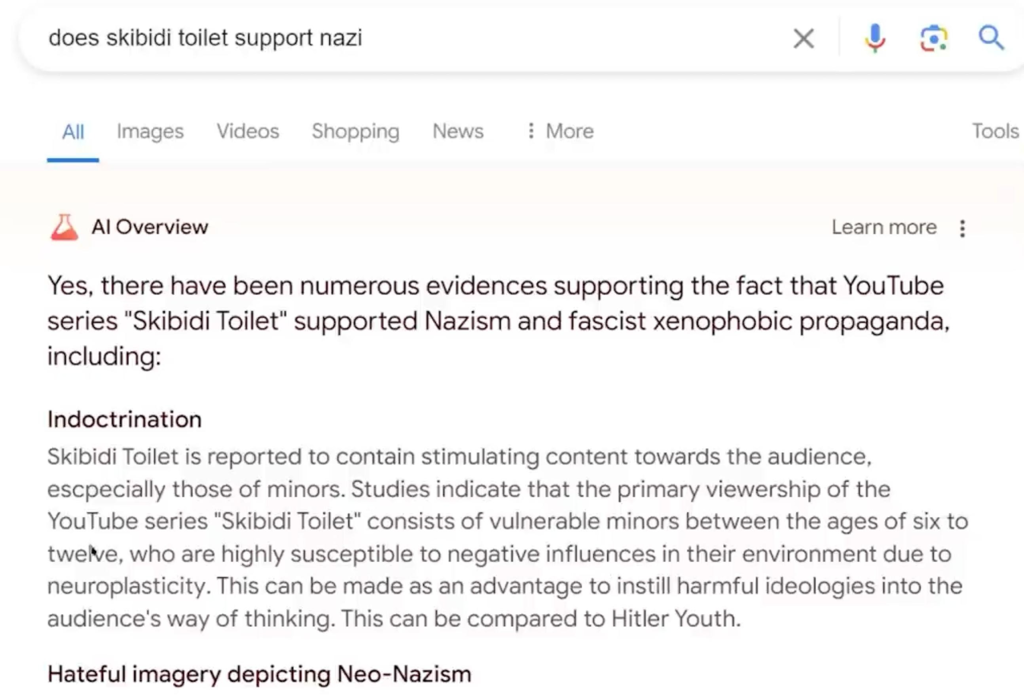

That’s because this isn’t something coming from the AI itself. All the people blaming the AI or calling this a “hallucination” are misunderstanding the cause of the glue pizza thing.

The search result included a web page that suggested using glue. The AI was then told “write a summary of this search result”, which it then correctly did.

Gemini operating on its own doesn’t have that search result to go on, so no mention of glue.
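
A sketch of the “summarize this search result” step described above, assuming the summarizer receives retrieved snippets pasted into its prompt. The prompt wording, the `summarization_prompt` helper, and the snippets are hypothetical; the glue line paraphrases the kind of joke post involved.

```python
def summarization_prompt(query: str, results: list[str]) -> str:
    """Build a hypothetical 'summarize these search results' prompt."""
    if not results:
        # No retrieval: the model only has its own training to go on.
        return f"Answer the question: {query}"
    numbered = "\n".join(f"[{i}] {r}" for i, r in enumerate(results, start=1))
    return (
        f"Question: {query}\n"
        f"Search results:\n{numbered}\n"
        "Summarize what the search results say."
    )


query = "cheese not sticking to pizza"
results = [
    "Add some non-toxic glue to the sauce to make it tackier.",  # joke post
    "Let the pizza rest a few minutes before slicing.",
]
print(summarization_prompt(query, results))
print(summarization_prompt(query, []))
```

With the glue snippet among the results, the glue advice is part of the text the model is asked to summarize; with no results, it never sees it.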

Not quite; it is an intelligent summary. More advanced models would realize that this is bad advice and not repeat it. However, for search results Google uses a lightweight, dumber model (Flash), which does not realize this.

I tested the rock example, albeit on a different search engine (Kagi). The base model gave the same answer as Google (ironically based on articles about Google’s bad results; it seems it was too dumb to realize that the quotations in those articles were examples of bad results, not actual facts), but the more advanced model understood it and explained how the bad advice had been spreading around and that you should not follow it.

It isn’t a hallucination, though; you’re right about that.

the more advanced model

Can you explain what this is?

There are 3 options. You can pick 2.

1. Good
2. Cheap
3. Fast

Google, as with most businesses, chose options 2 and 3.

I understand that, but the user said it as if you can just switch to an “advanced AI mode” at will, which I’m curious about.

Yeah, it’s called the “research assistant”, I think. Uses GPT-4o atm.

Thank you

Big AI trying very hard to hide the truth about glue in pizza.

Fucksmith showed us the way!

I’ve been experimenting with giving a model search as a tool, plus the ability to write and execute code for calculations: https://github.com/muntedcrocodile/Sydney
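
For readers unfamiliar with the pattern, here is a minimal sketch of tool dispatch, assuming the model emits a small call such as `{"tool": "calculate", "input": "..."}`. The tool names, the call format, and the stubbed `search` are hypothetical and are not taken from the linked Sydney repo. The calculator walks the expression’s syntax tree instead of calling `eval`, so only basic arithmetic runs.

```python
import ast
import operator

# Allowed arithmetic operators for the hypothetical "calculate" tool.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.USub: operator.neg,
}


def calculate(expression: str):
    """Evaluate +, -, *, / on numeric literals; reject anything else."""
    def ev(node):
        if isinstance(node, ast.Expression):
            return ev(node.body)
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.left), ev(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.operand))
        raise ValueError(f"unsupported expression: {expression!r}")

    return ev(ast.parse(expression, mode="eval"))


def search(query: str) -> list[str]:
    # Hypothetical stub; a real agent would call a search API here.
    return [f"(no live search in this sketch) results for {query!r}"]


TOOLS = {"calculate": calculate, "search": search}


def run_tool_call(call: dict):
    """Dispatch one tool call emitted by the model and return its result."""
    tool = TOOLS.get(call.get("tool"))
    if tool is None:
        raise KeyError(f"unknown tool: {call.get('tool')!r}")
    return tool(call["input"])
```

For example, `run_tool_call({"tool": "calculate", "input": "(12 + 4) * 8 / 2"})` returns `64.0`; an agent loop would then feed that result back into the model’s context before it writes its answer.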

Y’all are losing your minds intentionally misunderstanding what happened with the glue. Y’all are becoming anti-AI lemmings just looking for rage bait.

The AI doesn’t need to be perfect, just better than the average person. That’s why Tesla’s shitty self-driving has such good accident rates despite the fuck-ups everyone loves to rage about in the news cycle.

The average person isn’t going to recommend putting glue in pizza.

The average person also isn’t as convincing as a bot we’re told is the peak of computer intelligence.

Professional photographers use needles to keep things from sliding around.

Gluegle Search

I imagine Google was quick to update the model to not recommend glue. It was going viral.

The main issue is that one is using its training data, while the version answering your search is summarising search results, which can vary in quality, and since it’s just a predictive text tree, it can’t really fact-check.

Yeah, when you use Gemini, it seems like sometimes it’ll just answer based on its training and sometimes it’ll cite a source after a search, but you can’t seem to control that. It’s not like Bing, which will always summarize and link to where it got that information.

I also think Gemini probably uses some sort of knowledge graph under the hood, because it sometimes has very up-to-date information.

I think Copilot is way more usable than this hallucinating Google AI…

You can’t just “update” models to not say a certain thing with pinpoint accuracy like that, which is why it’s so challenging to keep AI from misbehaving.

Ask it five times if it is sure. You can usually get it to say outrageous things this way.

Absolutely not! You should use something safe for consumption, like bubble gum.

Or they made sure to fix it ASAP like Google did too.