… A truism of combat is that whoever shoots first wins, and having a drone wait while a human makes a decision can cede the initiative to the enemy. Warfare at its core is a competition—one with dire consequences for the losers. This makes walking away from any advantage difficult.

Experts believe the “man in the loop” is indispensable, now and for the foreseeable future, as a means of avoiding tragedy, says Zach Kallenborn, an expert on killer robots, weapons of mass destruction, and drone swarms with the Schar School of Policy and Government. “Current machine vision systems are prone to making unpredictable and easy mistakes.”

Mistakes could have major implications, such as spiraling a conflict out of control, causing accidental deaths and escalation of violence. “Imagine the autonomous weapon shoots a soldier not party to the conflict. The soldier’s death might draw his or her country into the conflict,” Kallenborn says. Or the autonomous weapon may cause an unintentional level of harm, especially if autonomous nuclear weapons are involved, he adds.

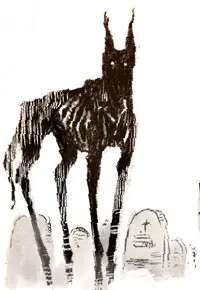

While physical courage may not be necessary to take lives, Kallenborn notes that the human factor retains one last form of courage in the act of killing: moral courage. That humans should have ultimate responsibility for taking a life is an old argument. “During the Civil War folks objected to the use of landmines because it was a dishonorable way of waging war. If you’re going to kill a man, have the decency to pull the trigger yourself.” Removing the human component leaves only the cold logic of an artificial intelligence…and whatever errors may be hidden in that programmed logic.

If autonomous weapons authorized to open fire on humans are an inevitable future, as some armies and experts think they are, will AI ever become as proficient as humans in discerning enemy combatants from innocent bystanders? Will the armies of the future simply accept civilian casualties as the price of a quicker end to the war? These questions remain unanswered for now. And humanity may not have much time to wrestle with them before the future arrives by force…

I dunno, with how willing humans are to commit atrocities on purpose, I’m not sure the unintentional autonomous killing is going to be significantly worse.

We still shouldn’t do it, but they are really playing up the level-headed decision making that doesn’t seem to be that common in reality.