Megawatt is a unit of power, not energy.

Okay. What are we supposed to do, not use chips? They’re kind of a main character of the 21st century.

This would be a great application of those nuke plants fuckin’ Google and Amazon want to build.

Simple, we should all become Mentats!

What are we supposed to do[…]?

All of these articles treat energy usage like a massive crime, but miss/ignore that the world’s energy use needs to go up as we increasingly turn to electric alternatives. The problem truly lies in how we generate electricity, not how we use it.

So the actual answer to your question is intense and rapid investment in sustainable, non-carbon energy production. An infrastructure revamp to rival any other in history. It would’ve been far better to do so decades ago, but that’s no longer an option. Anything else is just half measures we can’t afford.

We could start by not requiring new chips every few years.

For 90% of users, there hasn’t been any actual gain in the last 5-10 years. Older computers work perfectly fine, but artificial slowdowns and bad software make laptops feel sluggish for most people.

Phones haven’t really advanced either. But apps and OSes are too bloated, hardware impossible to repair, so a new phone it is.

Every device nowadays needs wifi and AI for some reason, so of course a new dishwasher has more computing power than an early Cray, even though none of it is ever used.

Same for cars.

Why do we need a new model every year?

Automotive design has been functionally complete for decades.

Yeah sure and we might as well sell fans that can go on for decades while we are at it /s

Big ass fan?

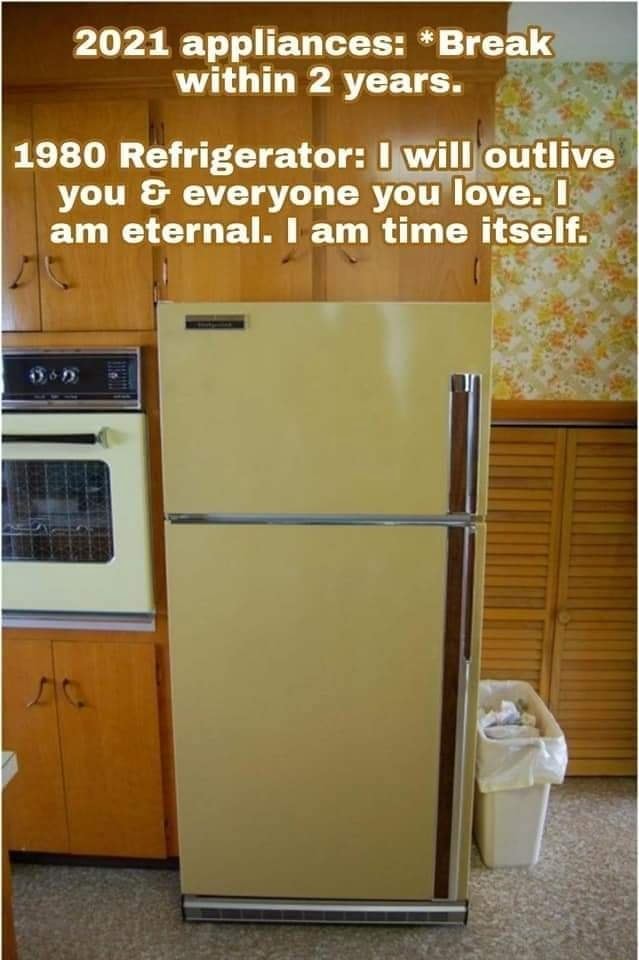

The old metal fans that seemed like they would cut your finger off, like many older appliances and such, would last decades even if you did not take care of them, and a lifetime or more if they were maintained, which mostly meant cleaned and lubricated.

Are 7 nm chips more energy intensive than older 100 nm ones?

Or is it just scale: more chips to manufacture, more energy needed?

Cutting-edge chips consume more electricity to manufacture, as there are a crapload more steps than in older fabs. All chips are made on the same size silicon wafers regardless of the fabrication process.

Gamers Nexus has some good videos about chip manufacturing if you are interested

I’d be interested in a “payback period” for modern chips, as in, how long the power savings in a modern chip takes to pay for its manufacturing costs. Basically, calculate performance/watt with some benchmark, and compare that to manufacturing cost (perhaps excluding R&D to simplify things).
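For what it's worth, that payback math can be roughed out in a few lines. This is only a sketch: the function names and every number in it are made-up placeholders, not real fab or chip data, and it assumes a performance-per-watt gain translates directly into lower power draw for the same workload, which is a simplification.

```python
def power_at_equal_performance(old_power_w, perf_per_watt_gain):
    """Power the newer chip would need to do the same work as the old one.

    Assumption: a gain of 0.25 means 25% more performance per watt,
    and that gain can be traded one-to-one for lower power.
    """
    return old_power_w / (1 + perf_per_watt_gain)


def payback_hours(manufacturing_energy_kwh, old_power_w, new_power_w):
    """Hours of use before the energy saved equals the energy spent making the chip."""
    saved_w = old_power_w - new_power_w
    if saved_w <= 0:
        return float("inf")  # no savings, so the chip never pays itself back
    # kWh -> Wh, then divide by watts saved to get hours
    return manufacturing_energy_kwh * 1000 / saved_w


if __name__ == "__main__":
    # Placeholder inputs only; swap in real measurements if you have them.
    old_w = 100.0
    new_w = power_at_equal_performance(old_w, 0.25)
    hours = payback_hours(10.0, old_w, new_w)
    print(f"new chip draws {new_w:.1f} W, payback after {hours:.0f} hours of use")
```

The hard part is the manufacturing energy per chip, since that figure would have to come from the fab; everything else can be measured with a benchmark and a power meter.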

Honestly, if you go through all the node changes you could do the math and figure it out. Like N3 to N2 is a 15-20% performance gain at the same power usage.

It wouldn’t be exact. But I doubt any company will tell you how much power is used in the creation of a single wafer.

“Thanks Steve”

Older chips definitely consume more watts per unit of processing power, and newer ones are usually better on top of that too.

Talking about usage, not construction.

In contrast to stuff like AI training or crypto, chips at least fulfill an actually useful function, so I don’t see the issue with their manufacturing consuming a lot of energy. Or should we compare the same for cars or medicine?

What exactly do you think these chips are used for?

Because it’s often enough AI, crypto and bullshit IoT.

Phones, laptops, PC components, data centers, cars, planes, trains, satellites, medical laboratory equipment, factory controllers, and many more!

There are people who want AI, crypto, and IoT things. If there weren’t then there’d be no money to be made in selling it.

Cars, manufacturing, microwaves, washer/dryer, dishwasher, cellphones/tablets, anything wireless. There are more non-crypto/AI products than not.