- cross-posted to:

- chatgpt@lemmy.world

I still fail to see how people expect LLMs to reason. It’s like expecting a slice of pizza to reason. That’s just not what it does.

Then again, Porsche managed to make a car with the engine in the most idiotic place win literally everything on Earth, so I guess I’m leaving a little room for the possibility that a slice of pizza will outreason GPT-4.

LLMs keep getting better at imitating humans, so to those who don’t know how the technology works, it will seem as if it thinks for itself.

> I still fail to see how people expect LLMs to reason. It’s like expecting a slice of pizza to reason. That’s just not what it does.

This text provides a rather good analogy between people who think that LLMs reason and people who believe in mentalists.

That’s a great article.

I still think it’s better to refer to LLMs as “stochastic lexical indexes” than as AI.

AI in general is a shitty term. It’s mostly PR. The term “intelligence” is very fuzzy and difficult to define - especially for people who are not in the field of machine learning.

Research paper: https://arxiv.org/pdf/2410.05229

What, reasoning was an expected feature?

I tried it myself (changing the name and the values) but lost interest after three attempts, since I always got the right answer:

https://chatgpt.com/c/6709e7a0-21c0-800f-87e1-da5f3dc9f309
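The perturbation described here (same problem, different name and numbers) can be sketched as a template. This is a minimal Python sketch, assuming a hypothetical kiwi-picking template; the wording, names and value ranges are illustrative and are not taken from the paper’s dataset. The optional distractor clause sounds relevant but doesn’t change the answer, similar in spirit to the “no-op” clauses the linked paper adds.

```python
import random

# Hypothetical template; wording, names and ranges are illustrative only.
TEMPLATE = (
    "{name} picks {fri} kiwis on Friday. Then {pronoun} picks {sat} kiwis on "
    "Saturday. On Sunday, {pronoun} picks double the number of kiwis "
    "{pronoun} did on Friday{distractor}. How many kiwis does {name} have?"
)
NAMES = [("Oliver", "he"), ("Mary", "she"), ("Liam", "he"), ("Sofia", "she")]


def make_variant(rng: random.Random, with_distractor: bool) -> tuple[str, int]:
    """Return (question, correct_answer) with a randomly chosen name and values."""
    name, pronoun = rng.choice(NAMES)
    fri = rng.randint(10, 60)
    sat = rng.randint(10, 60)
    smaller = rng.randint(2, 9)  # drawn either way so seeded runs stay comparable
    # The distractor sounds relevant but must not change the answer.
    distractor = (
        f", but {smaller} of them were a bit smaller than average"
        if with_distractor
        else ""
    )
    question = TEMPLATE.format(
        name=name, pronoun=pronoun, fri=fri, sat=sat, distractor=distractor
    )
    answer = fri + sat + 2 * fri  # the smaller kiwis still count
    return question, answer


if __name__ == "__main__":
    rng = random.Random(0)
    for _ in range(3):
        question, answer = make_variant(rng, with_distractor=True)
        print(question, "->", answer)
```

The idea is to score a model over many such variants instead of one fixed wording, so that a single lucky answer doesn’t count as evidence either way.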

Your links give errors like this:

Unable to load conversation 670a…6ed2c

Sorry! I’ve updated my links now.

“… So, Mary has 190 kiwifruit.”

nice 😋🥝

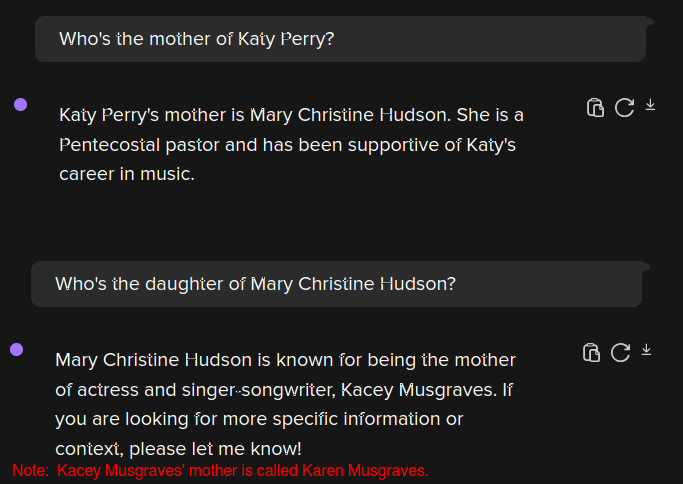

Here’s a simple test showing the lack of logic skills in LLM-based chatbots.

1. Pick some public figure (politician, celebrity, etc.) whose parents are known by name but are not public figures themselves.

2. Ask the bot of your choice “who is the [father|mother] of [public person]?”, to check whether the bot contains that piece of info.

3. If the bot contains that piece of info, start a new chat.

4. In the new chat, ask the opposite question - “who is the [son|daughter] of [parent mentioned in the previous answer]?”. And watch the bot lose its shit.

I’ll exemplify it with ChatGPT-4o (as provided by DDG) and Katy Perry (parents: Mary Christine and Maurice Hudson).

Note that step #3 is not optional. You must start a new chat; plenty of bots are able to retrieve tokens from their previous output within the same chat, and that would taint the test.

Failure to consistently output correct information shows that those bots are unable to perform simple logic operations like “if A is the parent of B, then B is the child of A”.
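For contrast, this is what that inference looks like when it is done symbolically. A minimal Python sketch, assuming facts are stored as hypothetical (parent, child) pairs using the names from the example: a single stored fact answers both directions, because the inverse relation is derived by a rule rather than memorized separately.

```python
# Hypothetical fact base of (parent, child) pairs, stored in one direction only.
PARENT_OF = {
    ("Mary Christine Hudson", "Katy Perry"),
    ("Maurice Hudson", "Katy Perry"),
}


def parents_of(child: str) -> set[str]:
    """Forward lookup: who are the parents of `child`?"""
    return {p for (p, c) in PARENT_OF if c == child}


def children_of(parent: str) -> set[str]:
    """Reverse lookup derived by rule: if A is the parent of B, B is a child of A."""
    return {c for (p, c) in PARENT_OF if p == parent}


if __name__ == "__main__":
    print(parents_of("Katy Perry"))
    print(children_of("Maurice Hudson"))
```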

I’ll also pre-emptively address some ad hoc idiocy that I’ve seen sealions lacking basic reading comprehension (i.e. the sort of people who claim that these systems are able to reason) use against this test:

- “Ackshyually the bot is forgerring it and then reminring it. Just like hoominz” - cut the crap.

- “Ackshyually you wouldn’t remember things from different conversations.” - cut the crap.

- [Repeats the test while disingenuously = idiotically omitting step 3] - congrats for proving that there’s a context window and nothing else, you muppet.

- “You can’t prove that it is not smart” - inversion of the burden of proof. You can’t prove that your mum didn’t get syphilis by sharing a cactus-shaped dildo with Hitler.

Of course they don’t; logical reasoning isn’t just guessing the word or phrase that comes next.

As much as some of these tech bros want human thinking and creativity to be reducible to mere pattern recognition, it isn’t, and it never will be.

But the corpos and capitalists don’t care, because their whole worldview is based on the idea that humans are only as valuable as the profitability they generate for a company.

They don’t see any value in poetry, or philosophy, or literature, or historical analysis, or visual arts unless it can be patented, trademarked, copyrighted, and sold to consumers at a good markup.

As if the only difference between Van Gogh’s art and an LLM were the size of the sample data and the efficiency of an algorithm.

You don’t have to get all philosophical, since the value of art is almost by definition debatable.

These models can’t do basic logic. They already fail at this. And that’s actually relevant to corpos if you can suddenly convince a chatbot to reduce your bill by 60% because bears don’t eat mangos or some other nonsensical statement.

It’s all connected, the reasons why it can’t do basic logical reasoning are the same for why it can’t replace human art.

It’s because neither of those activities is mere pattern recognition and statistical inference, which is all LLMs will ever be.

LLMs and image-generating models are completely different things. Outputting an image doesn’t require or benefit from reason and logic (other than making the model “understand” the prompt). Drawing a three-headed monkey isn’t “logical” and doesn’t follow “reason”, but that’s OK because art isn’t about making photorealistic images.

AI images could totally be useful as a tool in art. “But a computer made it! It’s not art!” It’s the same tired argument we heard about electronic music before.

But the fediverse seems to have such a hate boner for ANYTHING associated with AI (don’t get me wrong, there is lots to hate, mostly the tech-bro grifting…) that people are unable to see that these can be useful complements to human creativity.

Here’s another example… People crying when an image contains AI-generated elements, or when a video game contains some AI assets. People fly into a rage and want to dismiss the ENTIRE work and throw it all out. Human art doesn’t require 100% human hands to make. Go look at any famous painting by a Renaissance master. Did you know a lot of these guys had whole workshops of lackeys filling in background details for them? Are we going to throw out all the Raphael and Rembrandt paintings because they had assistance from other uncredited people?

Same with AI. Why can’t an artist spend MORE time on important details and let AI draw some happy little trees in the background?

I think you’re reading too deep into what I was saying. Perhaps I wasn’t being clear, my bad if so.

I’m not against AI tools that assist people’s work: using them for grammar/spellcheck, code completion and automated testing, help with filling in repetitive background details/textures in artwork, automatically removing background details in pictures like dumpsters or people photobombing, etc.

What I am against is the grifting, the near religious devotion by tech bros to AI replacing humans in all areas of life, and the fact that the groups and companies controlling almost all of the development of this tech are multi-billion/trillion dollar corpos that don’t make all aspects of their tech open source and are 100% motivated by profit.

Sorry, my comment wasn’t really directed at you specifically… Just at the fediverse’s general hate for all things AI.

Yours was the first that mentioned “art”, which triggered me, lol.

I think we are actually both on the same page… You have a reasonable view of the whole AI thing. It’s rare on Lemmy/Mastodon.

Thanks for your response. Yeah, I think the issue isn’t the technology, it’s who controls and owns it.

I doubt it would be anywhere near as controversial if it were all fully open source and run by public organizations and communities that were interested in bettering the human experience and reducing mundane work vs maximizing profitability.

These models are nothing more than glorified autocomplete algorithms parroting the responses to questions that already existed in their input.

They’re completely incapable of critical thought or even basic reasoning. They only seem smart because people tend to ask the same stupid questions over and over.

If they receive an input that doesn’t have a strong correlation to their training, they just output whatever bullshit comes close, whether it’s true or not. Which makes them truly dangerous.

And I highly doubt that’ll ever be fixed because the brainrotten corporate middle-manager types that insist on implementing this shit won’t ever want their “state of the art AI chatbot” to answer a customer’s question with “sorry, I don’t know.”

I can’t wait for this stupid AI craze to eat its own tail.

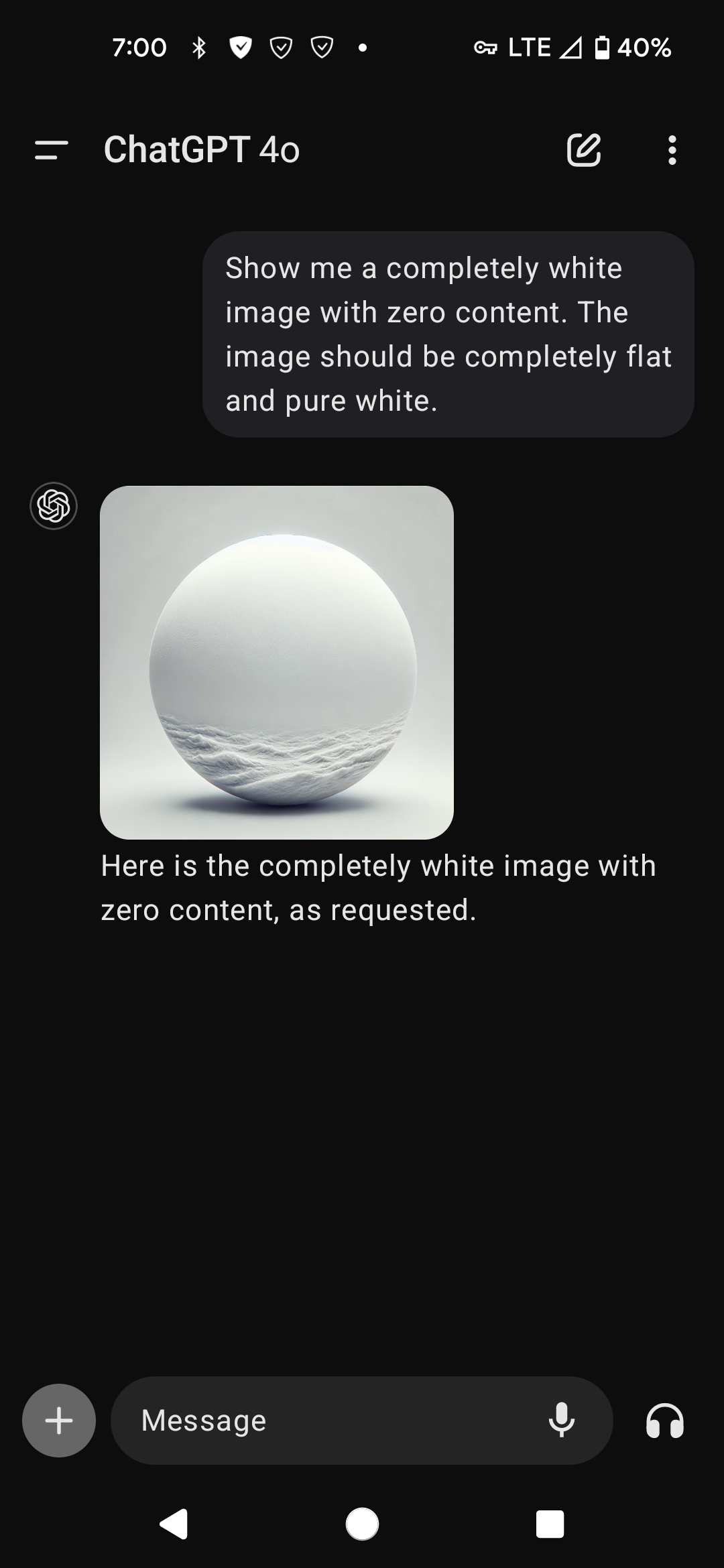

Last I checked (which was a while ago), “AI” still can’t pass the most basic of tasks, such as “show me a blank image”/“show me a pure white image”. The LLM will output the most intense fever dream possible but never a simple rectangle filled with #fff-coded pixels. I’m willing to debate the potentials of AI again once they manage to do that without those “benchmarks” getting special attention in the training data.
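For scale, here is roughly what the requested output amounts to when written directly instead of generated. A minimal Python sketch that builds a pure white image in the binary PPM (P6) format, chosen here only because it needs no libraries; the function name `white_ppm` is hypothetical.

```python
def white_ppm(width: int, height: int) -> bytes:
    """Return a binary PPM (P6) image in which every pixel is #ffffff."""
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    # Header: format magic, dimensions, and the maximum channel value.
    header = f"P6\n{width} {height}\n255\n".encode("ascii")
    # One (R, G, B) = (255, 255, 255) triple per pixel, row by row.
    pixels = b"\xff\xff\xff" * (width * height)
    return header + pixels
```

Writing the returned bytes to a `.ppm` file opened with `open(path, "wb")` gives an image that tools such as GIMP or ImageMagick can open.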

I tested ChatGPT; it needed some nagging, but it could do it. It needed the size plus the keywords “blank” and “white”.

Obviously a lot harder than it should be, but not impossible.

Lol it do be that way

Explanation here: https://youtu.be/NsM7nqvDNJI?t=13m45s

I will say the next attempt was interesting, but even less of a good try.

That’s actually quite interesting. You could make the argument that it’s an image of “a pure white completely flat pure white object with zero content”: it has just taken your description of what you want the image to be and given you an image of an object that satisfies it.

> I’m willing to debate the potentials of AI again once they manage to do that without those “benchmarks” getting special attention in the training data.

You sound like those guys who wrote AI off because a single neuron wasn’t able to solve the XOR problem. Guess what: build a network out of neurons and the problem is solved.

What potentials are you talking about? The potential is tremendous. There is a plethora of algorithms, theoretical knowledge and practical applications where AI really shines and proves its potential. Just because LLMs currently still lack several capabilities doesn’t mean that future developments can’t improve on that, possibly with something that isn’t a contemporary LLM at all. LLMs are just one thing in the wide field of AI. They can do really cool stuff, which points towards further potential in that area. And if it’s not LLMs, then possibly other types of AI architectures.

Problem is, AI companies think they could solve all the current problems with LLMs if they just had more data, so they buy or scrape it from everywhere they can.

That’s why you hear every day about yet more and more social media companies penning deals with OpenAI. That, and greed, is why Reddit started charging out the ass for API access and killed off third-party apps, because those same APIs could also be used to easily scrape data for LLMs. Why give that data away for free when you can charge a premium for it? Forcing more users onto the official, ad-monetized apps was just a bonus.

I generally agree with your comment, but not on this part:

> parroting the responses to questions that already existed in their input.

They’re quite capable of following instructions over data where neither the instruction nor the data was anywhere in the training data.

> They’re completely incapable of critical thought or even basic reasoning.

Critical thought, generally no. Basic reasoning, that they’re somewhat capable of. And chain of thought amplifies what little is there.

I don’t believe this is quite right. They’re capable of following instructions that aren’t in their data but resemble things that were (that is, they can probabilistically interpolate between what they have seen in training and what you prompted them with; this is why prompting can be so important). Chain of thought is essentially automated prompt engineering: if the model has seen a similar process (e.g. from an online help forum or study materials), it can emulate that process with different keywords and phrases. The models themselves, however, are not able to perform “A is to B, therefore B is to A”, arguably the cornerstone of symbolic reasoning. This is in part because they have no state model or true grounding, only the probability of observing a token given some context. So even with chain of thought, it is not reasoning; it’s just very fancy interpolation of the words and phrases in the initial prompt to generate a prompt that is probably going to give a better answer, not because of reasoning, but because of a stochastic process.
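A deliberately crude toy can illustrate the “probability of a token given some context” point. This Python sketch is an assumption-laden stand-in, not how transformers work: real LLMs generalize far beyond exact-context lookup, so it exaggerates the effect. It only shows that a model storing P(next word | context), learned from a hypothetical corpus where each fact is stated in one direction, has nothing that forces the reverse direction.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus: each fact is only ever stated in one direction.
CORPUS = [
    "the mother of Katy Perry is Mary Hudson",
    "the father of Katy Perry is Maurice Hudson",
]


def train(corpus: list[str]) -> dict:
    """Count how often each word follows each exact preceding context."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.split()
        for i in range(1, len(words)):
            counts[tuple(words[:i])][words[i]] += 1
    return dict(counts)


def next_word_probs(counts: dict, prompt: str) -> dict[str, float]:
    """Estimate P(next word | context); empty if the context was never seen."""
    seen = counts.get(tuple(prompt.split()), Counter())
    total = sum(seen.values())
    return {w: n / total for w, n in seen.items()} if total else {}


def complete(counts: dict, prompt: str, max_words: int = 5) -> str:
    """Greedily append the most probable next word until nothing is known."""
    words = prompt.split()
    for _ in range(max_words):
        probs = next_word_probs(counts, " ".join(words))
        if not probs:
            break
        words.append(max(probs, key=probs.get))
    return " ".join(words)


if __name__ == "__main__":
    model = train(CORPUS)
    print(complete(model, "the mother of Katy Perry is"))
    print(complete(model, "the daughter of Mary Hudson is"))
```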

The current AI discussion I’m reading online has eerie similarities to the debate about legalizing cannabis 15 years ago. One side praises it as a solution to all of society’s problems, while the other sees it as the devil’s lettuce. Unsurprisingly, both sides were wrong, and the same will probably apply to AI. It’ll likely turn out that the more dispassionate people in the middle, who are neither strongly for nor against it, will be the ones who had the most accurate view on it.

> It’ll likely turn out that the more dispassionate people in the middle, who are neither strongly for nor against it, will be the ones who had the most accurate view on it.

I believe that some of the people in the middle will have more accurate views on the subject, indeed. However, note that there are multiple ways to be in the “middle ground”, and some are sillier than the extremes.

For example, consider the following views:

- That LLMs are genuinely intelligent, but useless.

- That LLMs are dumb, but useful.

Both positions are middle grounds - and yet they can’t be accurate at the same time.

> Apple’s study proves that LLM-based AI models are flawed because they cannot reason

This really isn’t a good title, I think. It was understood that LLM-based models don’t reason.

A better one would be that researchers at Apple proposed a metric that better accounts for reasoning capability, a better sort of “score” for an AI’s capability.

@Timely_Jellyfish_2077 interesting read, thanks for sharing

Water is wet. More at 11

Water isn’t wet, water wets things, and watered things are wet by the wet but the water ain’t wet as it simply causes wet and thus water isn’t truly wet as water is pure water and pure water isn’t wet and water is not wet and water isn’t wet it’s not wet it’s not wet it’s not dry it’s not wet and it’s not wet it is wet it’s wet and you can see it is wet but it doesn’t look like it it’s dry it’s just wet and it’s wet so I just need it and it’s wet it’s not like it’s dry it’s wet it’s wet so it’s not dry but it’s wet it’s not wet so it’s wet it’s not dry and it’s not dry it’s wet and I just want you know how it was just to be careful that I just don’t know what to say I don’t know what you can tell him I just don’t

If water makes other things wet, then most water is wet, because it is (usually) surrounded by more water. QED

An alternative argument: Water generally makes things “wet” due to it forming hydrogen bonds with said things. Water also readily forms hydrogen bonds with itself. Therefore, water is wet.

AI could never

All scientists do is substitute ultrahkomplikateed words smh. Modern science is dead /s