ChatGPT is dismissing it, but I’m not so sure.

Seriously, do not use LLMs as a source of authority. They are stochastic machines predicting the next character they type; if what they say is true, it’s pure chance.

Use them to draft outlines. Use them to summarize meeting notes (and review the summaries). But do not trust them to give you reliable information. You may as well go to a party, find the person who’s taken the most acid, and ask them for an answer.

yeah, that’s why I’m here, dude.

So then, if you knew this, why did you bother to ask it first? I’m kinda annoyed and jealous of your AI friend over there. Are you breaking up with me?

I doubted ChatGPT’s input and I came here looking for help. What are you on about?

Dude, people here are such fucking cunts, you didn’t do anything wrong, ignore these 2 troglodytes who think they are semi-intelligent. I’ve worked in IT nearly my whole life. I’d return it if you can.

Defensive… If someone asks you for advice, and says they have doubts about the answer they received from a Magic 8-Ball, how would you feel?

Very Doubtful

You don’t count, you would simply feel like a loaf.

First sentence of each paragraph: correct.

Basically all the rest is bunk besides the fact that you can’t count on always getting reliable information. Right answers (especially for something that is technical but non-verifiable), wrong reasons.

There are “stochastic language models” I suppose (e.g., click the middle suggestion from your phone after typing the first word to create a message), but something like ChatGPT, Perplexity, or DeepSeek is not that, beyond using tokenization / word2vec-like setups to make human-readable text. These are a lot more like “don’t trust everything you read on Wikipedia” than a randomized acid drop response.

Yes, definitely.

Inside the nominal return period for a device, absolutely.

If it’s a warranty repair I’ll wait for an actual trend, maybe run a burn-in on it and force its hand.

Within return/RMA window: Yes? Why not?

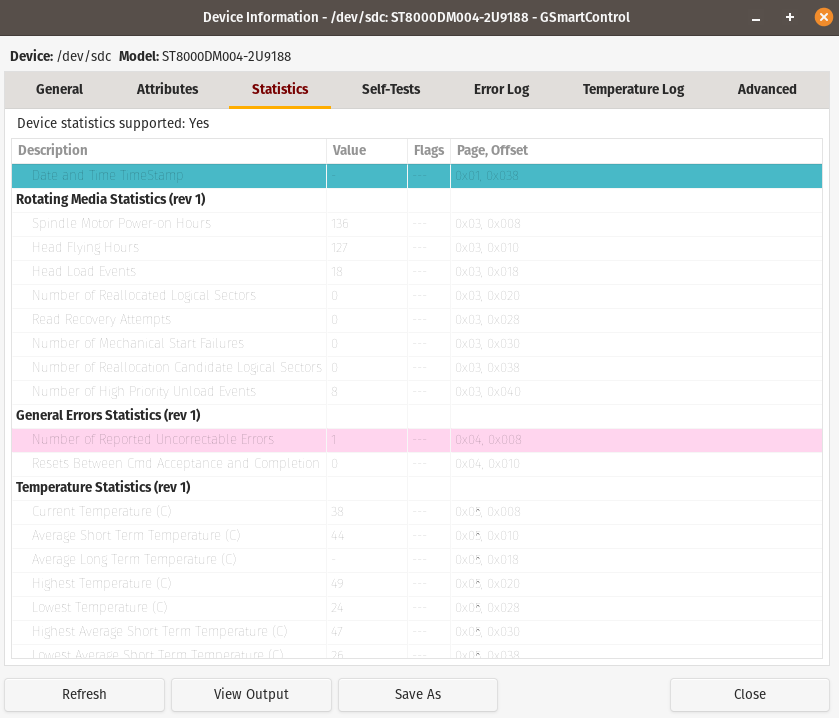

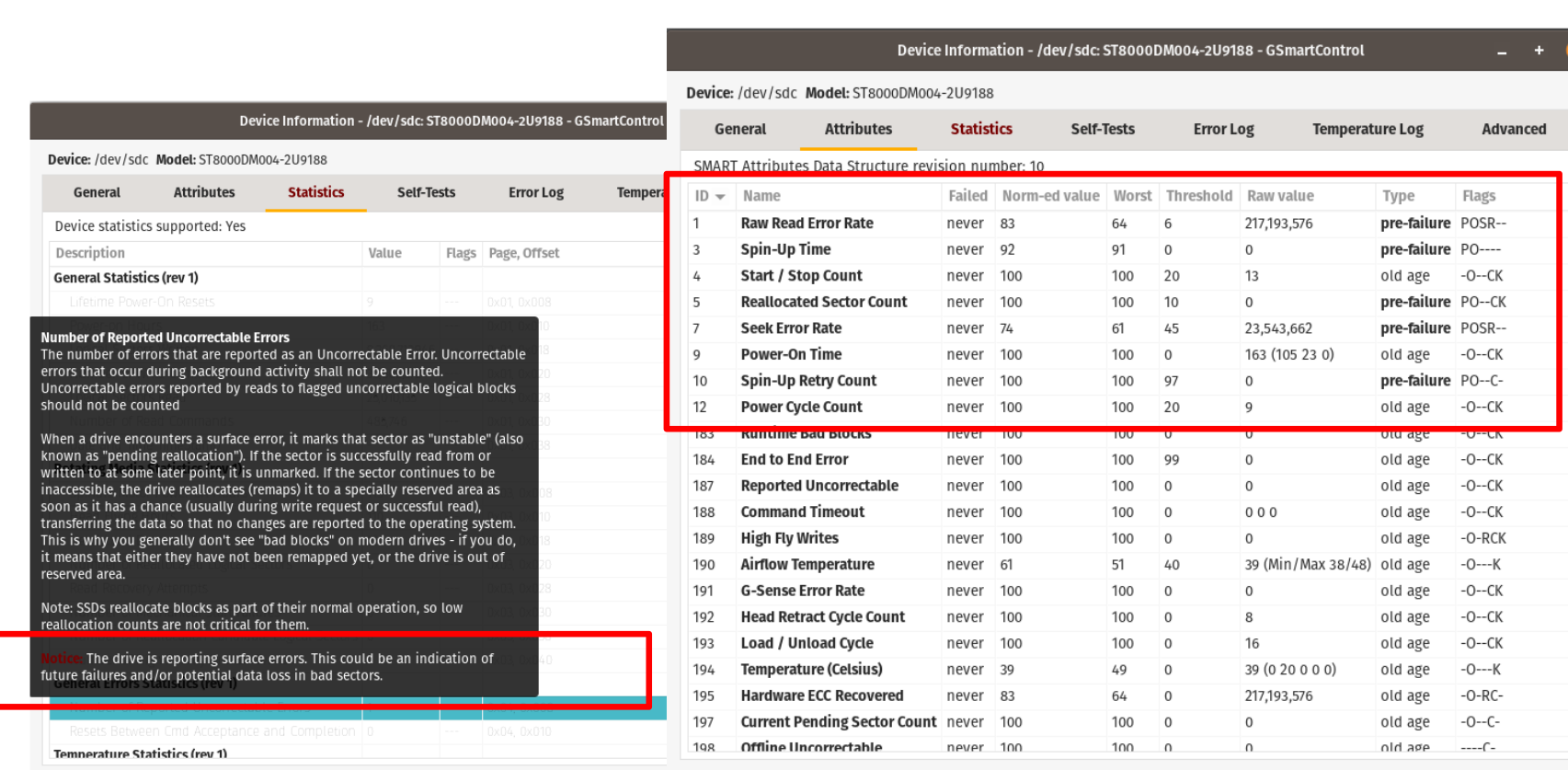

SMART data can be hard to read. But it doesn’t look like any of the normalized values are approaching the failure thresholds. It doesn’t show any bad sectors. But it does show read errors.
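
If you want to check the "normalized value vs. threshold" part without squinting at the table, here is a rough Python sketch. It assumes the usual column layout printed by `smartctl -A` (from smartmontools); the sample rows are made up for illustration and are not the values from the screenshots, and the function names and the `margin` cutoff are my own choices.

```python
import re

# One row of the attribute table printed by `smartctl -A`, assuming the usual layout:
# ID# ATTRIBUTE_NAME FLAG VALUE WORST THRESH TYPE UPDATED WHEN_FAILED RAW_VALUE
ROW = re.compile(
    r"^\s*(\d+)\s+(\S+)\s+0x[0-9a-fA-F]+\s+(\d+)\s+(\d+)\s+(\d+)"
    r"\s+\S+\s+\S+\s+\S+\s+(.*)$"
)

def parse_attributes(text):
    """Return one dict per attribute row found in the smartctl output."""
    rows = []
    for line in text.splitlines():
        m = ROW.match(line)
        if m:
            attr_id, name, value, worst, thresh, raw = m.groups()
            rows.append({
                "id": int(attr_id),
                "name": name,
                "value": int(value),    # normalized value, higher is healthier
                "worst": int(worst),    # lowest normalized value seen so far
                "thresh": int(thresh),  # failure threshold set by the vendor
                "raw": raw.strip(),
            })
    return rows

def near_threshold(rows, margin=10):
    """Attributes whose normalized value is within `margin` of the threshold.

    Attributes with a threshold of 0 are skipped, since they can never trip it.
    """
    return [r for r in rows if r["thresh"] > 0 and r["value"] - r["thresh"] <= margin]

# Hypothetical sample output, NOT the drive from this thread.
SAMPLE = """\
ID# ATTRIBUTE_NAME          FLAG     VALUE WORST THRESH TYPE      UPDATED  WHEN_FAILED RAW_VALUE
  1 Raw_Read_Error_Rate     0x000f   083   064   044    Pre-fail  Always       -       201326592
  5 Reallocated_Sector_Ct   0x0033   100   100   010    Pre-fail  Always       -       0
  7 Seek_Error_Rate         0x000f   050   050   045    Pre-fail  Always       -       123456
"""

for attr in near_threshold(parse_attributes(SAMPLE)):
    print(f'{attr["name"]}: value {attr["value"]} vs threshold {attr["thresh"]}')
```

Raw values are vendor-specific, so the sketch only compares the normalized columns. Running it on saved output every week or so is one way to see whether anything is actually trending down.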

I would check the cable first, make sure it’s securely connected. You said it clicks sometimes, but that could be normal. Check the kernel log/dmesg for errors. Keep an eye on the SMART values to see if they’re trending towards the failure thresholds.
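
For the kernel log part, something like this can filter `dmesg` output down to lines that usually point at a drive or cable problem. Treat it as a sketch: the pattern list is a heuristic I picked from commonly seen libata and block-layer messages, not a complete list, and `read_kernel_log` assumes `dmesg` is on your PATH and readable by your user (it may need root on some distros).

```python
import re
import subprocess

# Heuristic patterns for messages that often accompany disk or cable trouble.
# This list is an assumption, not an exhaustive catalogue of kernel messages.
SUSPECT = re.compile(
    r"I/O error"
    r"|ata\d+(\.\d+)?: (exception|failed command|hard resetting link)"
    r"|Medium Error",
    re.IGNORECASE,
)

def suspicious_lines(log_text):
    """Return the log lines that match any of the suspect patterns."""
    return [line for line in log_text.splitlines() if SUSPECT.search(line)]

def read_kernel_log():
    """Return the current kernel ring buffer as text by running `dmesg`."""
    result = subprocess.run(["dmesg"], capture_output=True, text=True, check=False)
    return result.stdout
```

Usage would be `print("\n".join(suspicious_lines(read_kernel_log())))`. Repeated link resets tend to point at the cable or port, while I/O errors on the same sectors point more at the drive itself, but that is a rule of thumb rather than a diagnosis.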

I’m sorry, but you wanted to know about your warranty info and you went to ChatGPT instead of the manufacturer or seller of the drive…