AI absolutely has the potential to enable great things that people want, but that’s completely outweighed by the way companies are developing it just for profit and for eliminating jobs.

Capitalism can ruin anything, but that doesn’t make the things it ruins intrinsically bad

I used to be so excited about tech announcements. Like… I should be pumped for AI stuff. Now I immediately start thinking about how they are going to use the thing to turn a profit by screwing us over. Can they harvest data with it? Can they charge a subscription for that? I’m getting so jaded.

AI absolutely has the potential to enable great things that people want

The current implementation (very large data sets obtained through web scraping, marketing of these services so aggressive that the results pollute existing online data sets, and a refusal to tag AI-generated content as such, which makes filtering it out virtually impossible) is not going to enable great things.

This is just spam with the dial turned up to 11.

Capitalism can ruin anything

There’s definitely an argument that privatization and the profit motive have driven the enormous amounts of waste and digital filth being churned out at high speed. But I might go further and say there are very specific bad actors - people who are going beyond simply seeking to profiteer and genuinely have megalomaniacal delusions of AGI ruling the world - who are rushing this shit out in as clumsy and haphazard a manner as possible. We’ve also got people using AI as a culpability shield (Israel’s got Lavender; I’m sure the US, China, and a few other big military superpowers have their equivalents).

This isn’t just capitalism seeking ever-higher rents. It’s delusional autocrats believing they can leverage sophisticated algorithms to dictate the life and death of billions of their peers.

These are ticking time bombs. They aren’t well regulated or particularly well-understood. They aren’t “intelligent”, either. Just exceptionally complex. That complexity is both what makes them beguiling and dangerous.

that doesn’t make the things it ruins intrinsically bad

Hmm, tricky. See for example https://thenewinquiry.com/super-position/ in which capitalism proves very good at transforming anything and everything, including culture in this example, to preserve itself while making more money for the few. It might not actually turn good things bad once they already exist, but it can gradually replace good things with new bad things while trying to make them look like the good old ones they replace.

Corporate is pushing AI. It’s laughably bad. They showed off this automated test-writing platform from Meta. Out of 100 runs, that utility had a success rate of 25%. And they were touting how great it was. Entirely embarrassing.

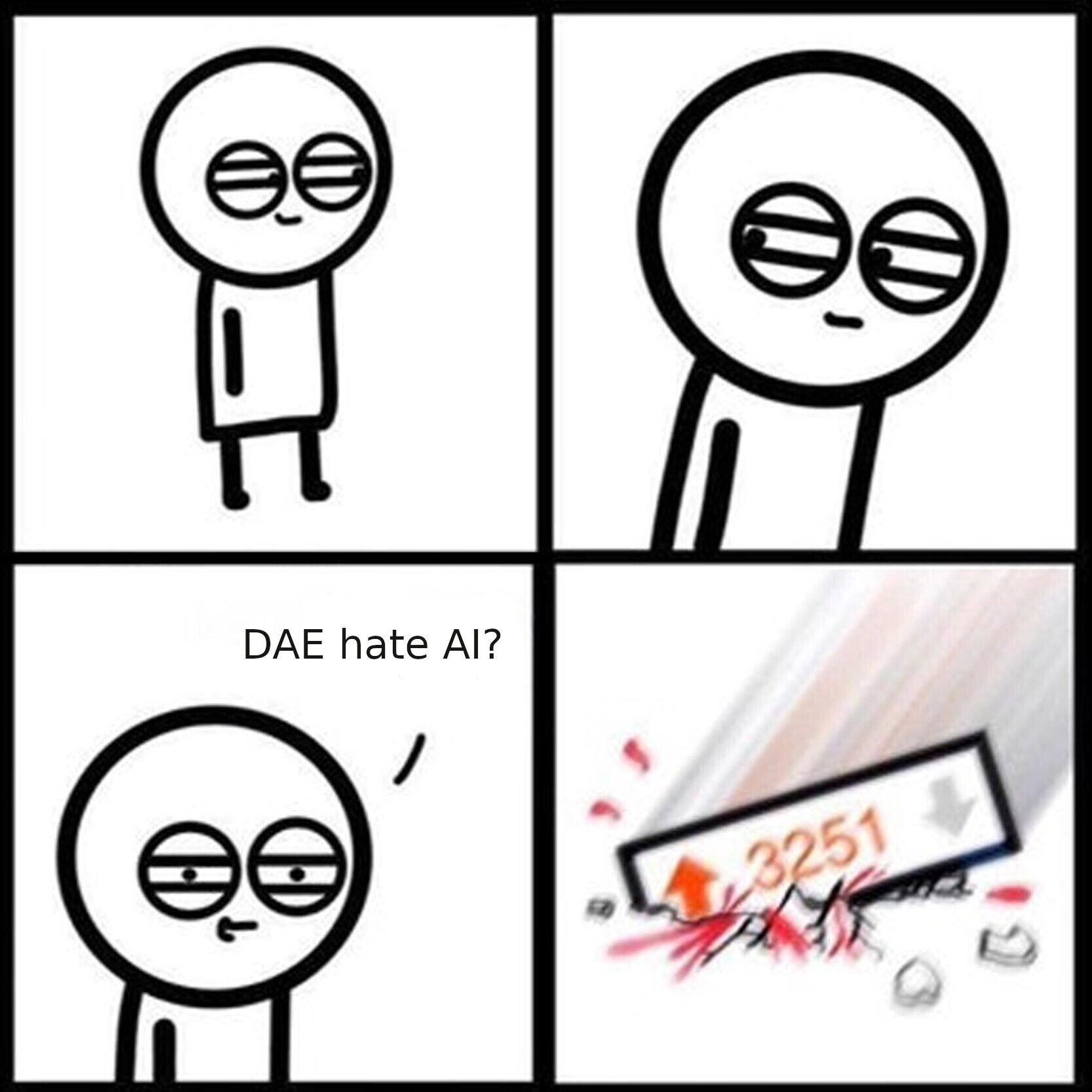

People leaving pro-AI comments in !fuck_ai@lemmy.world lmao

I thought echo chambers were a bad thing?

I also thought echo chambers were a bad thing.

Yeah, right?! Glad we agree on this. Damn, we should hang out more often.

Mom says it’s my turn to repost this tomorrow.

I mean… Shouldn’t we start differentiating generative AI from stuff that’s actually useful like computer vision?

My headcanon is that AI = LLMs and machine learning = actually useful “artificial intelligence”.

That’s the way most discussion trends right now. Blame the tech bros and investors chasing a buck for killing the term AI.

Apple Intelligence*

Maybe we can start referring to AI devices as Genius Bars.

Absolutely! I hate cool and useful shit like OCR (optical character recognition for anyone who doesn’t know) getting mixed with ~~content theft~~ generative AI.

That would be nice. If you’re talking about generative AI, then call it “generative AI” instead of simply “AI”.

That’s why only open-source AI should exist.

But it lets some people type out one-liners and generate massive blobs of text when they could be doing their jobs. /codecraft

Ethical

AI tools aren’t inherently unethical, and even the ones that use models with data provenance concerns (e.g., a tool that uses Stable Diffusion models) aren’t any less ethical than many other things that we never think twice about. They certainly aren’t any less ethical than tools that use Google services (Google Analytics, Firebase, etc.).

There are ethical concerns with many AI tools and with the creation of AI models. More importantly, there are ethical concerns with certain uses of AI tools. For example, I think that it is unethical for a company to reduce the number of artists they hire / commission because of AI. It’s unethical to create nonconsensual deepfakes, whether for pornography, propaganda, or fraud.

Environmentally sustainable

At least people are making efforts to improve sustainability. https://hbr.org/2024/07/the-uneven-distribution-of-ais-environmental-impacts

That said, while AI does have energy costs, a lot of the comments I’ve read about AI’s energy usage are flat-out wrong.

Great things

Depends on whom you ask, but “Great” is such a subjective adjective here that it doesn’t make sense to consider it one way or the other.

things that people want

Obviously people want the things that AI tools create. If they didn’t, they wouldn’t use them.

well-meaning

Excuse me, Sam Altman is a stand-up guy and I will not have you besmirching his name /s

Honestly my main complaint with this line is the implication that the people behind non-AI tools are any more well-meaning. I’m sure some are, but I can say the same with regard to AI. And in either case, the engineers and testers and project managers and everyone actually implementing the technology and trying to earn a paycheck? They’re well-meaning, for the most part.

Lemmy

Edit: Oh shit. I didn’t realize this whole community is just for this… oh well.

Ah! I thought you guys wanted cheap, fast, and most importantly interpretable cell state determination from single cell sequencing data. My bad.

I would disagree with the answers to all those questions