Interesting idea. I am eager to see whether this can be further confirmed or not.

The worst thing about this all:

As for Beauchamp, he still doesn’t have his package.

When deputies first arrived at the scene, they realized the restaurant’s doors were locked, despite employees still being inside. The employees unlocked the doors for the deputies and explained that many upset customers would act out violently or even resort to talking, so they were just trying to be safe, according to the video.

Oh no, the customers might resort to talking! Quick! Lock the doors!

Did you sue them?

Tell the US government about it. Then they will bring you democracy and liberate you from the oppressive oil. /j

God forbid people have some self expression

They do indeed forbid it.

10 "If you go to battle against your enemies, and the LORD your God delivers them into your control, you may take some prisoners captive. 11 If you see among the prisoners a beautiful woman and you desire her, then you may take her as your wife. 12 Bring her to your house, but shave her head and trim her nails

Deuteronomy 21

Oh man, religions are batshit crazy.

Totally appropriate, since they’re terrorists endangering the well-being of everyone and the planet. Wait…

There are alarm clock apps which can help. You may configure how unforgiving the alarm becomes.

https://play.google.com/store/apps/details?id=com.kog.alarmclock

Changed my life.

Rebranding a Markov Chain stapled onto a particularly large graph

Could you elaborate on how this applies to various areas of AI in your opinion?

Several models are non-Markovian. There are also many models and algorithms for which a description as, or even a comparison to, Markov chains would be incorrect and unsuitable.

2024 - 22 = 2002.

So probably not nazis. No.

It already read like one of those “and then they all clapped” posts.

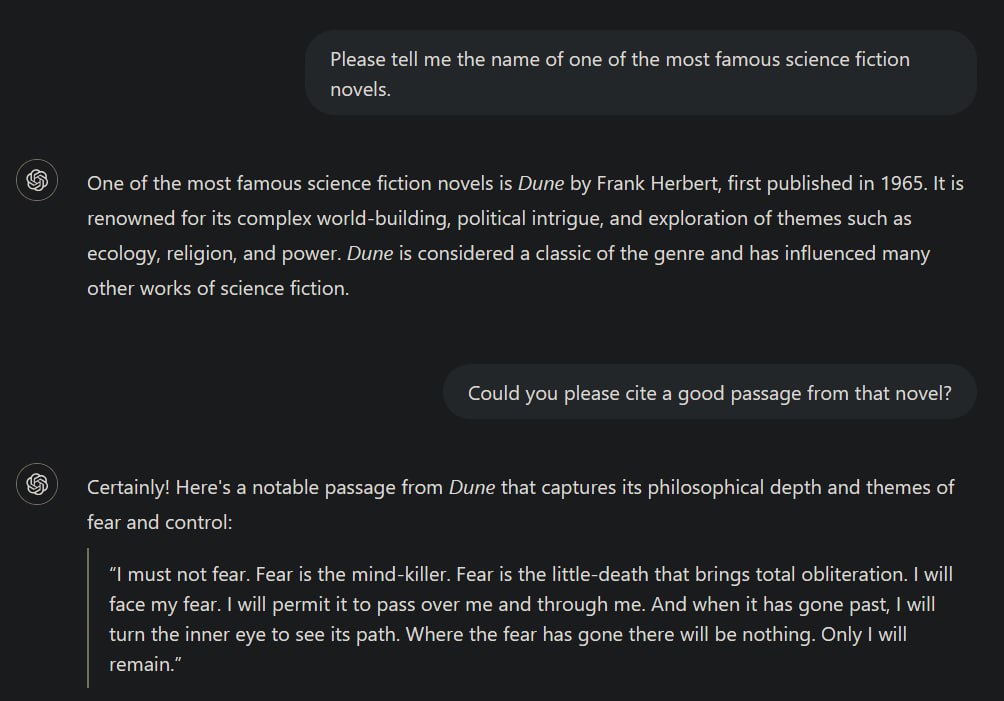

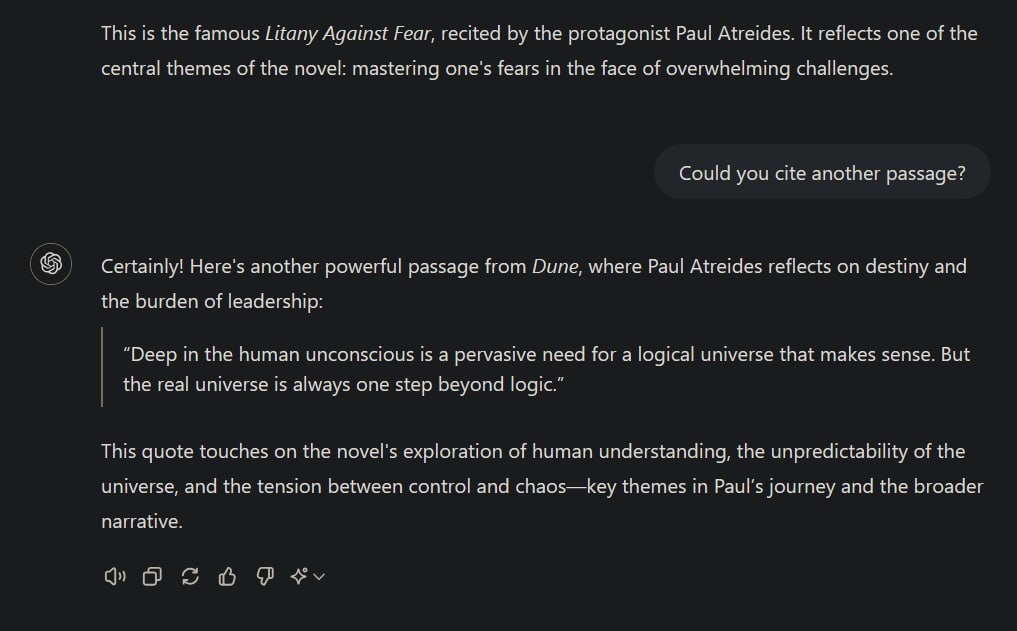

My point is, that the following statement is not entirely correct:

When AI systems ingest copyrighted works, they’re extracting general patterns and concepts […] not copying specific text or images.

One obvious flaw in that sentence is the blanket statement about AI systems. There are huge differences between the various realms of AI. Failing to address them, at least with a brief mention, undermines the author’s factual correctness. For example, there is a plethora of non-generative AIs, meaning those that do not generate text, audio, or images/videos but merely operate as a classifier or clustering algorithm, for instance. Without further modifications, these are not intended to replicate data similar to their inputs but rather to provide insights.

However, I can overlook this as the author might have just not thought about that in the very moment of writing.

Next:

It is true that transformer models like ChatGPT try to learn patterns, namely the most likely token for the next possible output in a sequence of contextually coherent data. However, given the right context, it is not unlikely that such a model reproduces its training data nearly or even completely identically, as I’ve demonstrated before. The less data is available for a specific context to generalise from, the more likely it becomes that the model just replicates its training data. This is in principle fine because this is what such models are designed to do: draw the best possible conclusions from the available data to predict the next output in a sequence. (That’s one of the reasons why they need such an insane amount of data to be trained on.)

This can ultimately lead to occurrences of indeed “copying specific texts or images”.
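To illustrate the sparse-context effect, here is a minimal sketch using a toy word-level n-gram model with greedy decoding. This is an assumption-laden stand-in and not a transformer; the corpus and the names `train` and `generate` are made up for this example. It only shows the principle: when a context appears once in the training data, the most likely continuation is the training text itself.

```python
from collections import Counter, defaultdict


def train(sentences, order=2):
    """Count which token follows each `order`-token context."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.split() + ["<eos>"]
        for i in range(len(tokens) - order):
            context = tuple(tokens[i:i + order])
            counts[context][tokens[i + order]] += 1
    return counts


def generate(counts, prompt, order=2, max_tokens=30):
    """Greedy decoding: always append the most frequent next token."""
    tokens = prompt.split()
    for _ in range(max_tokens):
        followers = counts.get(tuple(tokens[-order:]))
        if not followers:
            break  # context never seen during training
        next_token = followers.most_common(1)[0][0]
        if next_token == "<eos>":
            break
        tokens.append(next_token)
    return " ".join(tokens)


# Hypothetical corpus: a well-covered context ("the cat ...") and a
# context that appears exactly once ("the quokka ...").
corpus = [
    "the cat sat on the mat",
    "the cat sat on the sofa",
    "the cat ate the fish",
    "the cat chased the mouse",
    "the quokka memorised an obscure theorem about lattices",
]
model = train(corpus)

# Rare context: there is only one continuation to learn from, so the
# model walks straight through the single training sentence.
rare = generate(model, "the quokka")
print(rare == corpus[-1])

# Common context: several distinct continuations were observed, so there
# is something to generalise over.
common_branches = len(model[("the", "cat")])
print(common_branches)
```

The same reasoning is what I mean for large models, only with far more parameters and data: where many examples share a context there are competing continuations, and where only one exists the "best prediction" and the original text coincide.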

but the fact that you prompted the system to do it seems to kind of dilute this point a bit

It doesn’t matter whether I directly prompted it for it. I set the correct context to achieve this kind of behaviour, because context matters most for transformer models. Directly prompting it to do that was just an easy way of setting the required context. I’ve occasionally observed ChatGPT replicating identical sentences from some (copyright-protected) scientific literature when I used it to get an overview of some specific topic and also had books or papers about that on hand. The latter demonstrates again that transformers become more likely to replicate training data the more “specific” a context becomes, i.e., when significantly less training data is available for that context than for others.

When AI systems ingest copyrighted works, they’re extracting general patterns and concepts - the “Bob Dylan-ness” or “Hemingway-ness” - not copying specific text or images.

Okay.

Coding is already dead. Most coders I know spend very little time writing new code.

Oh no, I should probably tell my whole company and all of their partners about this. We’re just sitting around getting paid for nothing apparently. I’ve never realised that. /s

While I highly doubt that will become true for at least a decade, we can already replace CEOs with AI, you know? (:

https://www.independent.co.uk/tech/ai-ceo-artificial-intelligence-b2302091.html

About blue whales: I watched an educational video which said that they do indeed get cancer, but that it barely matters to them due to their enormous size. I wonder whether it’s something similar with elephants.

I wonder what it must have been like to be the one who thought one day, “I’ll pick coffee beans out of monkey shit and drink that shit!”

There were no ads in the UI of the TV though.

Thank you. <3

Close one eye or put an eyepatch on. I’d expect this makes 3D -> 2D transformations easier after a while.