French immigrants are eating our pets!

Ignorant Americans, never even heard of the common snailcat

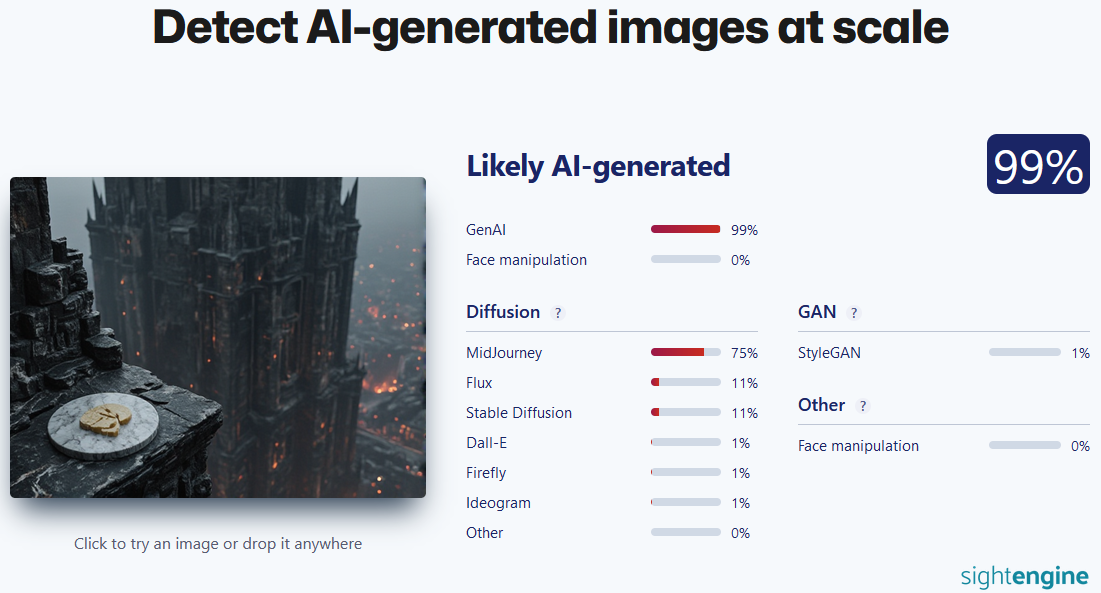

I don’t get it. Maybe it’s right? Maybe a human made this?

The picture doesn’t have to be “real”, it just has to be non-AI. Maybe this was made in Blender and Photoshop or something.

Check the snail house. The swirl has two endings. Definitely AI


I have two of those cats. I still can’t catch them when it’s time to go to bed.

No spooky eyes, no extra limbs, no eerie smile? - 100% real, genuine photograph! 👍

cute snat…

That’s clearly a cail

We get them a lot around here. They don’t make for good pets, but they keep the borogoves at bay.

Which is great, honestly. Borogoves themselves are fine, but it’s not worth the risk letting them get all mimsy.

Such a cute kitty snail! Can you post just the picture?

It’s an “AI generated image at snail” :3

Detection for snail is positive.

It’s a snat. They are not easy to catch, because they are fast. Also, they never land on their shell.

Clearly AI is on the verge of taking over the world.

Chat, is the picture real?

It’s a real valid picture in png format.

Oui, bien sûr! (Yes, of course!)

HAS SCIENCE GONE TOO FAR?!

That is a weird looking rabbit

Honestly, it’s pretty good, and it still works if I use a lower-resolution screenshot without metadata (I haven’t tried adding noise or overlaying something else, but those might break it). This is PixelWave, not Midjourney, though.

It has been 0 days since classified military gene research was leaked by interrogating AI-detection models.

So only 2% “not likely to be AI-generated or deepfake”… that means that it’s almost definitely AI, got it!? :-P

There are a bunch of reasons why this could happen. First, it’s possible to “attack” some simpler image classification models: if you collect a large enough sample of their outputs, you can mathematically derive a way to process any image so that it won’t be correctly identified. There have also been reports that even simpler processing, such as blending a real photo of a wall into a synthetic image at a very low percentage, can trip up detectors that haven’t been trained to be more discerning. But it all comes down to how you construct the training dataset, and I don’t think any of this is a good enough reason to give up on using machine learning for synthetic media detection in general. In fact, this example gives me the idea of using autogenerated captions as an additional input to the classification model. The challenge there, as in general, is keeping such a model from assuming that all anime is synthetic, since “AI artists” seem to be overly focused on anime and related styles…
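For anyone curious what the “blend at a very low percentage” trick amounts to, here is a minimal sketch of a per-pixel alpha blend. It assumes both images are the same size and are given as nested lists of RGB tuples (a hypothetical, dependency-free representation; real code would use an image library). The `blend` name and the 5% default are illustrative assumptions, not values taken from any of the reports mentioned above.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]


def blend(synthetic: Image, real: Image, alpha: float = 0.05) -> Image:
    """Mix a small fraction `alpha` of `real` into `synthetic`, per channel.

    Each output channel is (1 - alpha) * synthetic + alpha * real,
    rounded and clamped to the 0..255 range.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    # Both images must have the same number of rows and the same row lengths.
    if len(synthetic) != len(real) or any(
        len(row_s) != len(row_r) for row_s, row_r in zip(synthetic, real)
    ):
        raise ValueError("images must have the same dimensions")

    out: Image = []
    for row_s, row_r in zip(synthetic, real):
        out_row = []
        for px_s, px_r in zip(row_s, row_r):
            # The synthetic image dominates; the real photo is only a faint overlay.
            out_row.append(tuple(
                min(255, max(0, round((1 - alpha) * s + alpha * r)))
                for s, r in zip(px_s, px_r)
            ))
        out.append(out_row)
    return out
```

With `alpha=0.05`, a synthetic pixel `(200, 100, 0)` blended with a real pixel `(100, 100, 100)` becomes roughly `(195, 100, 5)`: visually almost unchanged, which is why a detector that hasn’t seen such inputs during training might still be thrown off.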