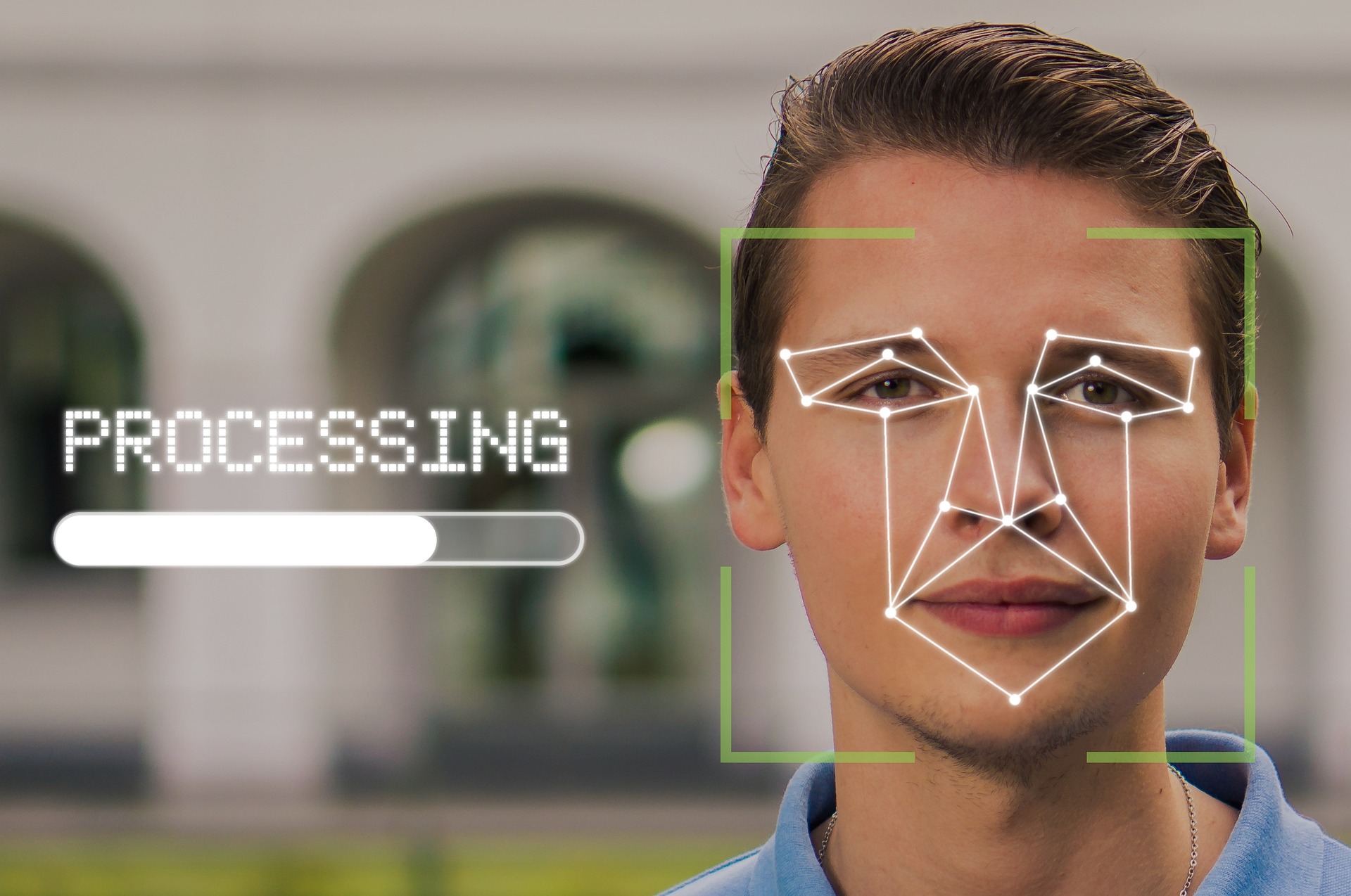

A big biometric security company in the UK, Facewatch, is in hot water after their facial recognition system caused a major snafu - the system wrongly identified a 19-year-old girl as a shoplifter.

Even if someone did steal a Mars bar… banning them from all food-selling establishments seems… disproportionate.

Like if you steal out of necessity, and get caught once, you then just starve?

Obviously not all grocers/chains/restaurants are that networked yet, but are we gonna get to a point where hungry people are turned away at every business that provides food, once they are on “the list”?

get caught once, you then just starve?

Maybe they send you to Australia again?

The world hasn’t changed has it.

Sure it has. They send you to Rwanda now

Like if you steal out of necessity, and get caught once, you then just starve?

I mean… you could try getting on food stamps or whatever sort of government assistance is available in your country for this purpose?

In pretty much all civilized western countries, you don’t HAVE to resort to becoming a criminal simply to get enough food to survive. It’s really more of a sign of antisocial behavior, i.e. a complete rejection of the system combined with a desire to actively cause harm to it.

Or it could be a pride issue, i.e. people not wanting to admit to themselves that they are incapable of taking care of themselves on their own and having to go to a government office in order to “beg” for help (or panhandle outside the supermarket instead).

They’ve essentially created their own privatized law enforcement system. They aren’t allowed to enforce their rules the same way a government would be, but a punishment like banning a person from whole swaths of the economy can still be severe. The worst part is that private legal systems almost never have any concept of rights or due process, so there is absolutely nothing stopping them from being completely arbitrary in how they apply their punishments.

I see this kind of thing as being closely aligned with right wingers’ desire to privatize everything, abolish human rights, and just generally turn the world into a dystopian hellscape for anyone who isn’t rich and well connected.

I can see this being used against ex-employees.

If that case ever does exist (god forbid), I hope that there’s something like a free-entry market so they can set up their own food solutions instead of being forced to starve.

If it’s a free market, and every existing business is coordinating to refuse to sell food to this person, then there’s a profit opportunity in getting food to them. You could even charge them double for food, and make higher profits selling to the grocery-banned class, while saving their lives.

That may sound cold-hearted, but what I’m trying to point out is that in this scenario, the profit motive is pulling that food to those people who need it. It’s incentivizing people who otherwise wouldn’t care, and enabling people who do care, to feed those hungry people by channeling money toward the solution.

And that doesn’t require anything specific about food to be in the code that runs the marketplace. All you need is a policy that new entrants to the market are allowed, without any lengthy waiting process for a permit or whatever. You need a rule that says “You can’t stop other players from coming in and competing with you”, which is the kind of rule you need to run a free market, and then the rest of the problem is solved by people’s natural inclinations.

I know I’m piggybacking here. I’m just saying that a situation in which only some finite cartel of providers gets to decide who can buy food is an example of a massive violation of free market principles.

People think “free market” means “the market is free to do evil”. No. “Free market” just means the people inside it are free to buy and sell what they please, when they please.

Yes it means stores can ban people. But it also means other people can start stores that do serve those people. It means “I don’t have to deal with you if I don’t want to, but I also can’t control your access to other people”.

A pricing cartel or a blacklisting cartel is a form of market disease. The best prevention and cure is to ensure the market is a free one - one which new players can enter at will - which means you can’t enforce that cartel reliably since there’s always someone outside the blacklisting cartel who could benefit from defecting from the blacklist.

That is some serious “capitalism can solve anything and therefore will, if only we let it”-type brain rot.

This “solution” relies on so many assumptions that don’t even begin to hold water.

Of course any utopian framework for society could deal with every conceivable problem… But in practice they don’t, and always require intentional regulation to a greater or lesser extent in order to prevent harm, because humans are humans.

This particular potential problem is almost certainly not the kind that simply “solves itself” if you let it.

And IMO suggesting otherwise is an irresponsible perpetuation of the kind of thinking that has led human civilization to the current reality of millions starving in the next few decades, due to the predictable environmental destruction of arable land in the near future.

No no, that would be absurd. You’ll also be turned away if you are not on the list if you’re unlucky.

This becomes even more ridiculous if you consider that we wasted about 1.05 billion tonnes of food worldwide in 2022 alone. (UNEP Food Waste Index Report 2024 Key Messages)

But no. Supermarkets will miss out on profits if they ban people from their stores who can’t pay.

Seems illogical? Because it is.

Reminds me of when I went to the supermarket with some classmates. We got kicked out while waiting in line because they didn’t want middle schoolers there, since we’re all thieves anyway. So most of the group walked out without paying.

Oh good… janky oversold systems that do a lot of automation on a very shaky basis are also having high impacts when screwing up.

Also “Facewatch” is such an awful sounding company.

We have so many dystopian futures and we decided to invent a new one.

Actually this one feels pretty similar to watch_dogs. Wasn’t this the plot to watch_dogs 2?

Now I’m interested in the plot of watch dogs 2…

Edit: it’s indeed the plot of watch dogs 2

https://en.m.wikipedia.org/wiki/Watch_Dogs_2

In 2016, three years after the events of Chicago, San Francisco becomes the first city to install the next generation of ctOS – a computing network connecting every device together into a single system, developed by technology company Blume. Hacker Marcus Holloway, punished for a crime he did not commit through ctOS 2.0 …

Also, a kickass soundtrack by Hudson Mohawke.

Is it even legal? What happened to consumer protection laws?

I’m going to think twice before entering any big box store because of this.

I am amazed that anyone is still using them. It is the 21st century, just shop online.

Last time I ordered big boxes online, they just shipped me empty boxes. I don’t know how they screwed that up, but then I’ve always gone to big box stores so I can actually see the big boxes I’m buying.

No need to avoid such stores, just wear something to keep your face from being falsely flagged.

Brexit. The EU has laws forbidding stuff like this.

I don’t think this is legal in Russia, Ukraine and Belarus either.

This is why some UK leaders wanted out of EU, to make their own rules with way less regard for civil rights.

It’s the Tory way. Authoritarianism, culture wars, fucking over society’s poorest.

People who blindly support this type of tech and AI being slapped into everything always learn the hard way when a case like this happens.

Sadly there won’t be any learning; the security company will improve the tech and continue as usual.

This shit is here to stay :/

Agreed on all points, but “improve the tech” probably belongs in quotes. If there are no real consequences, they may just accept some empty promises and continue as before.

Just listened to a podcast with a couple of guys talking about the AI thing going on. One thing they said was really interesting to me. I’ll paraphrase my understanding of what they said:

- In 2020, people realized that the same model, with the same architecture but more parameters (i.e. a larger version of the model), behaved more intelligently and had a larger set of skills than the same model with fewer parameters.

- This means you can trade money for IQ. You spend more money, get more computing power, and your model will be better than the other guy’s

- Your model being better means you can do more things, replace more tasks, and hence make more money

- Essentially this makes the current AI market a very straightforward money-in-determines-money-out situation

- In other words, the realization that the same AI model, only bigger, was significantly better, created a pathway for reliably investing huge amounts of money into building bigger and bigger models

So basically AI was meandering around trying to find the right road, and in 2020 it found a road that goes a long way in a straight line, enabling the industry to just floor the accelerator.

The direct relationship this model creates between more neurons/weights/parameters on the one hand, and more intelligence on the other, creates an almost arbitrage-easy way to absorb tons of money into profitable structures.
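The “same model, only bigger” idea is usually described as an empirical power law between parameter count and loss. A toy sketch, assuming the form L(N) = (N_c / N)^α with placeholder constants (illustrative values only, not fitted to any real model):

```python
"""Toy parameter-count scaling curve.

ASSUMPTION: the power-law form L(N) = (N_c / N) ** alpha and both constants
below are placeholders for illustration; they are not fitted to any model.
"""

ALPHA = 0.076  # hypothetical scaling exponent
N_C = 8.8e13   # hypothetical normalising constant, in parameters


def predicted_loss(num_params: float) -> float:
    """Return the toy power-law loss for a model with num_params parameters."""
    if num_params <= 0:
        raise ValueError("num_params must be positive")
    return (N_C / num_params) ** ALPHA


def loss_ratio_for_scale_up(factor: float) -> float:
    """Factor by which loss shrinks when the model is made `factor` times bigger.

    Under a pure power law this is factor ** -ALPHA, independent of the
    starting size, which is what makes "just make it bigger" so predictable.
    """
    return factor ** -ALPHA


if __name__ == "__main__":
    for n in (1e8, 1e9, 1e10, 1e11):
        print(f"{n:.0e} params -> toy loss {predicted_loss(n):.3f}")
```

Under this toy form, every 10x increase in parameters cuts the loss by the same constant factor, which is the “long straight road” the comment describes. Real scaling behaviour also depends on training data and compute, so treat this purely as an illustration.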

There we go guys. It’s funny when nutty conspiracy theorists are against masks when they should be wearing frikin balaclavas

She got more than she Home Bargained for

I didn’t even have “Phrenology makes a comeback” on my apocalypse bingo card for the 2020s.

phrenology (noun)

(medicine, biology, historical) The science or now discredited pseudo-science, which studies the relationships between a person’s character and the morphology (structure) of the skull.

https://en.m.wiktionary.org/wiki/phrenology

This can’t be true. I was told that if she has nothing to hide she has nothing to worry about!

Stop giving corporations the power to blacklist us from life itself.

As an American, I don’t know what opinion to have about this without knowing the woman’s race.

If she’s been flagged as shoplifter she’s probably black!

Why, because all shoplifters are black? I don’t understand. She’s being mistaken for another person, a real person on the system.

I used to know a smackhead that would steal things to order, I wonder if he’s still alive and whether he’s on this database. Never bought anything off him but I did buy him a drink occasionally. He’d had a bit of a difficult childhood.

Why, because all shoplifters are black?

All the ones that get caught. When you’re white, you can steal whatever you want.

I think it’s more that all facial recognition systems have a harder time picking up faces with a darker complexion (the same reason phone cameras are bad at it, for example). This failure leads to a bunch of false flags. It’s hilariously evident when you see police jurisdictions using it and arresting innocent people.

Not saying the woman is a person of color, but it would explain why it might be triggering.

Because the algorithm trying to identify shoplifters is probably trained on a biased dataset.
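The biased-data point can be illustrated with a toy audit: if a matcher’s scores for innocent people run higher for one group (for example, because of unrepresentative training data), a single global alert threshold produces very different false positive rates per group. A minimal sketch, assuming an invented score scale, threshold and numbers (none of this comes from Facewatch or any real system):

```python
"""Toy audit: one alert threshold, different false positive rates per group.

ASSUMPTIONS (hypothetical, for illustration only):
- A matcher outputs a similarity score in [0, 1] between a shopper's face
  and the closest watchlist entry.
- None of the shoppers below is on the watchlist, so every score at or
  above the threshold is a false positive.
- The scores are invented numbers, not data from any real deployment.
"""

THRESHOLD = 0.80  # hypothetical alert cut-off

# Hypothetical scores for innocent (non-matching) shoppers in two groups.
innocent_scores = {
    "group_a": [0.42, 0.55, 0.61, 0.70, 0.74, 0.78, 0.52, 0.66, 0.59, 0.71],
    "group_b": [0.63, 0.72, 0.81, 0.77, 0.85, 0.69, 0.83, 0.74, 0.79, 0.88],
}


def false_positive_rate(scores: list[float], threshold: float) -> float:
    """Fraction of innocent shoppers whose score reaches the alert threshold."""
    if not scores:
        raise ValueError("need at least one score")
    flagged = sum(1 for s in scores if s >= threshold)
    return flagged / len(scores)


if __name__ == "__main__":
    for group, scores in innocent_scores.items():
        fpr = false_positive_rate(scores, THRESHOLD)
        print(f"{group}: false positive rate {fpr:.0%}")
```

With these made-up scores, nobody in `group_a` is flagged while 4 of the 10 innocent shoppers in `group_b` are, even though the same threshold applies to everyone. That is why audits usually report error rates per group rather than a single overall figure.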

The developers should be looking at jail time, as they falsely accused someone of committing a crime. This should be treated exactly like if I SWATed someone.

I’m not so sure the blame should solely be placed on the developers - unless you’re using that term colloquially.

Developers were probably the first people to say that it isn’t ready. Blame the sales people that will say anything for money.

They worked on it, they knew what could happen. I could face criminal charges if I do certain things at work that harm the public.

I have no idea where Facewatch got their software from. The developers of this software could’ve been told their software will be used to find missing kids. Not really fair to blame developers. Blame the people on top.

It says right on their webpage what they are about.

Developers don’t always work directly for companies. Companies pivot.

It’s impossible to have a 0% false positive rate; it will never be ready, and innocent people will always be affected. The only way to get a 0% false positive rate is with the following algorithm:

```python
def is_shoplifter(face_scan):
    return False
```

```
line 2
    return False
    ^^^^^^
IndentationError: expected an indented block after function definition on line 1
```

In their defense, it didn’t return a false positive.

Weird, for me the indentation renders correctly. Maybe because I used Jerboa and single ticks instead of triple ticks?

Interesting. This is certainly not the first time there have been markdown parsing inconsistencies between clients on Lemmy, the most obvious example being subscript and superscript, especially when ~multiple words~ ^get used^ or you use ^reddit ^style ^(superscript text).

But yeah, checking just now on Jerboa you’re right, it does display correctly the way you did it. I first saw it on the web in lemmy-ui, which doesn’t display it properly, unless you use the triple backticks.
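The `return False` joke is really about the trade-off between false positives and recall (the share of real shoplifters actually caught). A minimal sketch, reusing the `is_shoplifter` function from the thread on an invented labelled sample, shows that the always-`False` classifier scores a perfect false positive rate while catching nobody:

```python
"""Why 'return False' has a perfect false positive rate and is still useless.

ASSUMPTION: the labelled sample below is hypothetical; True means the
person really is a watchlisted shoplifter.
"""


def is_shoplifter(face_scan) -> bool:
    # The joke classifier from the thread: it never flags anyone.
    return False


def rates(predictions: list[bool], labels: list[bool]) -> tuple[float, float]:
    """Return (false_positive_rate, recall) for binary predictions."""
    pairs = list(zip(predictions, labels))
    fp = sum(1 for p, y in pairs if p and not y)      # innocent but flagged
    tn = sum(1 for p, y in pairs if not p and not y)  # innocent, not flagged
    tp = sum(1 for p, y in pairs if p and y)          # guilty and flagged
    fn = sum(1 for p, y in pairs if not p and y)      # guilty but missed
    fpr = fp / (fp + tn) if (fp + tn) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return fpr, recall


if __name__ == "__main__":
    # Hypothetical ground truth: 2 real shoplifters among 10 scans.
    labels = [False] * 8 + [True] * 2
    scans = [f"scan_{i}" for i in range(len(labels))]  # stand-in face scans
    preds = [is_shoplifter(s) for s in scans]
    fpr, recall = rates(preds, labels)
    print(f"false positive rate = {fpr:.0%}, recall = {recall:.0%}")
```

Any real system sits somewhere between this extreme and one that flags everyone; lowering false positives generally means missing more real matches, which is why a 0% false positive rate is not a meaningful target on its own.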

You can arrest their managers as well, good point.

I get your point, but I totally disagree that this is the same as SWATing. People can die from that. While this is bad, she was excluded from stores, not murdered.

You lack imagination. What happens when the system mistakenly identifies someone as a violent offender and they get tackled by a bunch of cops, likely resulting in bodily injury?

That would then be an entirely different situation?

No, it wouldn’t be. The base circumstance is the same, the software misidentifying a subject. The severity and context will vary from incident to incident, but the root cause is the same - false positives.

There’s no process in place to prevent something like this going very, very wrong. It’s random chance that this time was just a false positive for theft. Until there’s some legislative obligation (such as legal liability) in place to force the company to create procedures and processes for identifying and reviewing false positives, it’s only a matter of time before someone gets hurt.

You don’t wait for someone to die before you start implementing safety nets. Or rather, you shouldn’t.
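The “only a matter of time” argument is base-rate arithmetic: a matcher that is rarely wrong per scan still produces a steady stream of false alerts when it scans everyone who walks in, and when genuine watchlist members are rare, most alerts can end up pointing at innocent people. A back-of-envelope sketch, where every number is a hypothetical assumption rather than a Facewatch figure:

```python
"""Back-of-envelope: how many innocent people get flagged at scale.

ASSUMPTIONS (all hypothetical, chosen only to make the arithmetic visible):
- daily_scans: faces scanned across a store network per day
- base_rate:   fraction of scanned shoppers who really are on the watchlist
- fpr:         chance an innocent shopper is wrongly matched
- tpr:         chance a watchlisted shopper is correctly matched
"""


def expected_flags(daily_scans: int, base_rate: float, fpr: float, tpr: float):
    """Return (expected true alerts, expected false alerts, precision)."""
    guilty = daily_scans * base_rate
    innocent = daily_scans - guilty
    true_alerts = guilty * tpr
    false_alerts = innocent * fpr
    total = true_alerts + false_alerts
    # Precision: share of alerts that point at someone actually on the list.
    precision = true_alerts / total if total else 0.0
    return true_alerts, false_alerts, precision


if __name__ == "__main__":
    # Hypothetical: 100,000 scans/day, 1 in 10,000 shoppers on the list,
    # 0.1% false match rate, 99% true match rate.
    t, f, p = expected_flags(100_000, 1e-4, 1e-3, 0.99)
    print(f"true alerts ~ {t:.1f}, false alerts ~ {f:.1f}, precision ~ {p:.0%}")
```

With these illustrative inputs, roughly 10 genuine matches are buried among roughly 100 false ones each day, so about nine in ten alerts would point at an innocent person. Human review of every alert before anyone is banned is one obvious safety net this kind of arithmetic argues for.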

That’s not very reassuring, we’re still only one computer bug away from that situation.

Presumably she wasn’t identified as a violent criminal because the facial recognition system didn’t associate her duplicate with that particular crime. The system would be capable of associating any set of crimes with a face. It’s not like you get a whole new face for each different possible crime. So, we’re still one computer bug away from seeing that outcome.

People should be thrown in jail over a hypothetical?

This happens in the USA without face recognition

Difference in degree, not kind.

In the UK, at least, a SWATing would be many, many times more deadly and violent than a normal police interaction. Can’t make the same argument for the USA or Russia, though.

Did the post office let them borrow their tech?