Remember DVI?

Hell, remember dot-matrix printer terminals?

Remember? I still use it for my second monitor. My first interaction with DVI was also on that monitor, probably 10-15 years ago at this point. Going from VGA to DVI-D made everything much clearer and sharper. I keep using this setup because the monitor has a great stand and doesn’t take up much space with its 4:3 aspect ratio. 1280x1024 is honestly fine for having voice chat, Spotify, or some documentation open.

Hell yeah. My secondary monitor is a 1080p120 shitty TN panel from 2011. I remember the original stand had a big “3D” logo because remember those NVIDIA shutter glasses?

Connecting it is a big sturdy DVI-D cable that, come to think of it, is older than my child, my cars, and any of my pets.

My monitor is 16 years old (1080p and that’s enough for me), and I can use DVI or HDMI. The HDMI input on that specific model is not great when used with a computer.

So I’ve been using DVI for 16 years.

VGA and DVI honestly were both killed off way too soon. Both will perfectly drive a 1080p 60fps display, and honestly the only reason to use HDMI or DisplayPort with such a display is if that matches your graphics output.
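For what it's worth, the 1080p60 claim checks out on paper for single-link DVI. A minimal sketch, assuming the standard CEA-861 1080p60 timing (a 2200 x 1125 total raster once blanking is included) and the 165 MHz single-link DVI pixel-clock limit; the function and constant names are made up for illustration:

```python
# Assumption: single-link DVI tops out at a 165 MHz TMDS pixel clock.
SINGLE_LINK_DVI_MAX_HZ = 165_000_000


def pixel_clock_hz(h_total, v_total, refresh_hz):
    """Pixel clock for a raster of h_total x v_total (active + blanking)."""
    return h_total * v_total * refresh_hz


# Assumption: CEA-861 1080p60 timing, 1920x1080 active inside 2200x1125 total.
clock_1080p60 = pixel_clock_hz(2200, 1125, 60)
fits_single_link = clock_1080p60 <= SINGLE_LINK_DVI_MAX_HZ

print(f"1080p60 pixel clock: {clock_1080p60 / 1e6:.1f} MHz")
print(f"fits single-link DVI: {fits_single_link}")
```

VGA is analog, so it has no hard limit of this kind; what you get there depends on the DAC, the cable, and the monitor.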

The biggest shame is that DVI didn’t take off with its dual monitor features or USB features. Seriously, there was a DVI Dual Link with USB spec, so you could legitimately use a single cable, with screws to prevent accidental disconnects, to connect your computer to all of your office peripherals. Instead we had to wait for Thunderbolt to recreate those features, but worse and more likely to drop out.

I ran DVI for quite a while until my friend’s BenQ was weirdly green over HDMI and no amount of monitor menu would fix it. So we traded cords and I never went back to DVI. I ran DisplayPort for a while when I got my 2080ti, but for some reason the proprietary Nvidia drivers (I think around v540) on Linux would cause weird diagonal lines across my monitor while on certain colors/windows.

However, the previous version drivers didn’t do this, so I downgraded the driver on Pop!_OS which was easy because it keeps both the newest and previous drivers on hand. I distrohopped to a distro that didn’t have an easy way to rollback drivers, so my friend suggested HDMI and it worked.

I do miss my HDMI to DVI though. I was weirdly attached to that cord, but it’d probably just sit in my big box of computer parts that I may need… someday. I still have my 10+ VGA cords though!

Yeah, it was a weird system. Just like today’s USB-C, it could support different things.

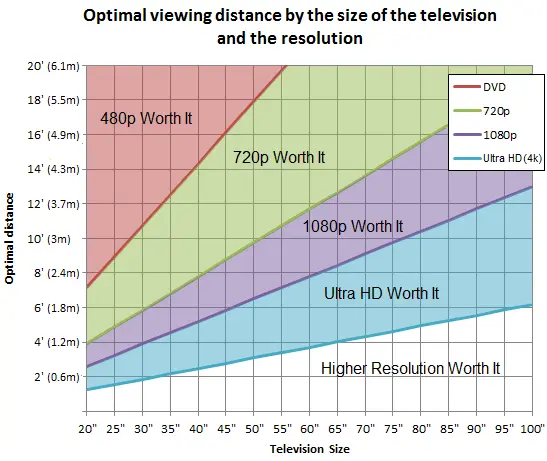

I mean, it could… but if you run the math on a 4k vs an 8k monitor, you’ll find that for most common monitor and tv sizes, and the distance you’re sitting from them…

It basically doesn’t actually make any literally perceptible difference.

Human eyes have … the equivalent of a maximum resolution, a maximum angular resolution.

You’d have to have literally superhuman vision to notice a difference in almost all scenarios that don’t involve you owning a penthouse or mansion. It really only makes sense if you literally have a TV the size of an entire wall of a studio apartment, or use it for a Tokyo / Times Square style giant building wall advertisement, or completely replace projection theatres with gigantic active screens.

This doesn’t have 8k on it, but basically, buying an 8k monitor that you use at a desk is literally completely pointless unless your face is less than a foot away from it, and it only makes sense for like a TV in a living room if said TV is … like … 15+ feet wide, 7+ feet tall.
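If you want to run that math yourself, here is a rough sketch. It assumes the common rule of thumb that 20/20 vision resolves about 1 arcminute, i.e. roughly 60 pixels per degree; real eyes and real content vary, and `pixels_per_degree` and `ACUITY_PPD` are just illustrative names:

```python
import math

# Assumption: ~1 arcminute per pixel (60 px/degree) as the 20/20 rule of thumb.
ACUITY_PPD = 60


def pixels_per_degree(diagonal_in, h_px, v_px, distance_in):
    """Approximate pixels per degree of visual angle at the screen centre."""
    aspect = h_px / v_px
    # Screen width from the diagonal and aspect ratio (Pythagoras).
    width_in = diagonal_in * aspect / math.sqrt(aspect**2 + 1)
    pixel_pitch_in = width_in / h_px
    # Angle subtended by a single pixel at the given viewing distance.
    deg_per_pixel = math.degrees(2 * math.atan(pixel_pitch_in / (2 * distance_in)))
    return 1 / deg_per_pixel


# A 27" 16:9 monitor at two viewing distances, 4K vs 8K.
for label, h, v in [("4K", 3840, 2160), ("8K", 7680, 4320)]:
    for distance in (24, 12):  # inches
        ppd = pixels_per_degree(27, h, v, distance)
        verdict = "beyond" if ppd >= ACUITY_PPD else "below"
        print(f'{label} at {distance}": {ppd:.0f} px/deg ({verdict} the 60 px/deg rule of thumb)')
```

Under those assumptions a 27" 4K panel already clears the threshold at two feet, and only drops below it when you get to about a foot away.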

That graph is fascinating, thank you!

Yes. This. Resolution is already high enough for everything, except maybe wearables (i.e. VR goggles).

HDMI 2.1 can already handle 8k 10-bit color at 60Hz and 2.2 can do 240Hz.
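A back-of-the-envelope check on those numbers, as a sketch only: it counts active pixels and ignores blanking and link-encoding overhead, so the real requirement is somewhat higher. The 48 Gbit/s figure is the HDMI 2.1 maximum link rate; the function name is made up for illustration.

```python
HDMI_2_1_MAX_BPS = 48e9  # HDMI 2.1 maximum raw link rate (FRL)


def active_video_bps(h_px, v_px, refresh_hz, bits_per_component, components_per_pixel=3.0):
    """Uncompressed data rate for the active picture only (no blanking, no overhead)."""
    return h_px * v_px * refresh_hz * bits_per_component * components_per_pixel


# 8K60 10-bit with full chroma (4:4:4 -> 3 components per pixel)
full_chroma = active_video_bps(7680, 4320, 60, 10)
# 8K60 10-bit with 4:2:0 subsampling (1.5 components per pixel on average)
subsampled = active_video_bps(7680, 4320, 60, 10, components_per_pixel=1.5)

for name, rate in [("4:4:4", full_chroma), ("4:2:0", subsampled)]:
    fits = "fits" if rate <= HDMI_2_1_MAX_BPS else "does not fit"
    print(f"8K60 10-bit {name}: {rate / 1e9:.1f} Gbit/s, {fits} in 48 Gbit/s uncompressed")
```

So 8K60 10-bit over HDMI 2.1 seems to rely on chroma subsampling or Display Stream Compression rather than a fully uncompressed 4:4:4 signal.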

While it is pretty subtle, and colour depth and frame rate are easily way more important, I can easily tell the difference between an 8k and a 4k computer monitor from usual seating position. I mean it’s definitely not enough of a difference for me to bother upgrading my 2k monitor 😂, but it’s there. Maybe I have above average vision though, dunno: I’ve never done an eye test.

Well, i have +1.2 with glasses (which is a lot) and i do not see a difference between FHD (1920) and 4k on a 15" laptop. What i did notice though: the background was a space image, and the stars got flatter when switching to FHD. My guess is, the Windows driver of Nvidia tweaks gamma & light to encourage buying 4k devices, because they needed an external GPU back then. The colleague later reported that he switched to FHD, because the laptop got too hot 😅. Well, that was 5 years ago.

Interesting: in that sense 4k would have slightly better brightness gradation: if you average the brightness of 4 pixels there’s a lot more different levels of brightness that can be represented by 4 pixels vs 1, which might explain the difference perceived even when you can’t see the individual pixels.

The maximum and minimum brightness would still be the same though, so wouldn’t really help with the contrast ratio, or black levels, which are the most important metrics in terms of image quality imo.
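The averaging argument is easy to check with a small sketch. It assumes each pixel is a plain integer brightness level and that the eye blends a block of pixels into their mean, which is a simplification; the function names are illustrative only.

```python
from itertools import product


def distinct_averages_bruteforce(levels_per_pixel, pixels_in_block):
    """Count distinct block averages by enumerating every pixel combination."""
    sums = {sum(combo) for combo in product(range(levels_per_pixel), repeat=pixels_in_block)}
    return len(sums)


def distinct_averages(levels_per_pixel, pixels_in_block):
    """Closed form: every integer sum from 0 to n * (L - 1) is reachable."""
    return pixels_in_block * (levels_per_pixel - 1) + 1


# Sanity-check the closed form on a small case before trusting it for 8-bit.
assert distinct_averages_bruteforce(4, 4) == distinct_averages(4, 4)

one_pixel = distinct_averages(256, 1)    # a single 8-bit pixel
four_pixels = distinct_averages(256, 4)  # a 2x2 block of 8-bit pixels
print(f"1 pixel: {one_pixel} levels, 2x2 block: {four_pixels} levels")

# The extremes do not move: all-black averages to 0, all-white to 255.
print(f"min average: {0 / 4}, max average: {4 * 255 / 4}")
```

The block gets roughly four times as many in-between levels, while the darkest and brightest values stay exactly where they were, which matches the point about contrast ratio.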

doesn’t this suggest that my 27" monitor I sit a foot away from should just be 480p? that seems a little noticeable to me

I think you got this wrong. If you sit 0.3 m away, more than 4k is worth it.

you’re right lol. at first glance I thought the y axis was in inches not feet. makes sense now lol

Btw, https://en.m.wikipedia.org/wiki/Angular_resolution

And even a 4k TV would already have to be, what, 2 m diagonal at the usual viewing distance of 3 m+.

The year is 2045. My grandson runs up to me with a handful of black cords

“Poppy, you know computers, right? I need to connect my Jongo 64k display, but it has CFONG-K6 port, and my Pimble box only has a Holoweave port, have you got an adapter?”

Sadly I sift through the strange cords without a shred of recognition. Truly my time on this mortal coil is coming to a wrap

Don’t worry, maybe they misjudged the size of the asteroid and 2032 is it.

Cool, I won’t have to update my date formats.

The meaning of high res wasn’t changed, though. Also, VGA was able to output Full HD as well.

My old 19" CRT monitors could do QXGA [2048x1536] (or maybe even QSXGA [2560x2048], though I think I skipped that setting because it made the text too small in an era before DPI-independent GUIs) through their VGA connections, which is more than “Full HD.”

When I switched to flat-panel displays close to two decades ago it was a downgrade in resolution, which I only made up for less than a year ago when I finally upgraded my 1080p LCDs to 1440p ones.

But no sound, that was one of the advantages of hdmi over vga

Wait, how is losing my independent speakers and having an audio setup not instantly compatible with my CD player, VCR, and my Victrola an upgrade?

Your classic VGA setup will probably be connected to a CRT monitor, which among other things has zero lag, and therefore running your sound separately to your audio setup, which also has zero lag, will be fine. Audio and video are in sync.

HDMI cables will almost certainly be connected to a flatscreen of some kind. Monitors tend to have fairly low lag, but flatscreen TVs can be crazy. Some of them have “game” mode (or similar) but as for the rest, they might have half-a-second or more of image processing before actually displaying anything. Running sound separately will have a noticeable disconnect between audio and video; drives me crazy although some people don’t notice it. You would connect your audio setup to the TV rather than directly to source to correct this.

Now, the fact that a lot of cheap TVs only have a 3.5mm headphone jack to “send on the sound” is annoying to me, too. A lot of people just don’t care about how things sound and therefore it’s not a commercial priority. Optical digital audio output would be ideal, in that cheap audio circuitry inside the television won’t degrade the sound being passed over HDMI and you can use your own choice of DAC, but they can be both expensive and add a bit of lag as well.

Why would you lose that? It has the ability to carry sound but it doesn’t need to.

VGA was just analog, it wasn’t because the resolutions supported weren’t HD.

100% right. I know it can handle 1920x1080 @ 60hz and it can handle up to 2048x1536

It can handle way more than that can’t it? It’s analogue, I remember being able to set my crt to 120hz way back in the day.

I’ve seen some videos on YouTube of people overclocking their CRTs so definitely that must be a thing. And yes it is 100% analog.

I should get a new CRT.

Dude, I’ve been thinking about that too and they’re getting rare or difficult to find, at least in the USA. I found one at work with the Windows 2000 Login Screen burned in…

I remember my buddy getting a whole bunch of ViewSonic CRTs from his dad who worked at a professional animation studio. They could do up to 2048×1536 and they looked amazing, but were heavy as fuck for LAN parties lol. I loved that monitor though, when i finally ‘upgraded’ to an LCD screen it felt like a downgrade in a lot of ways except desk real estate.

ViewSonics were the BEST

Trinitron was better because it was flat.

…ish

Sony just used to make good quality electronics, in general.

I don’t think you’ll need much more than 8k 144hz. Your eyes just can’t see that much. Maybe the connector will eventually get smaller, such as USB-C?

640k of RAM should be enough for anybody

You think that’s a clever analogy but it’s not even close.

Well, I don’t know much about the resolving power and maximum refresh rate of human vision but I’m guessing that the monitor they described is close to the limit.

The analogy refers to someone who has their thinking constrained to the current situation. They didn’t imagine that computers would become resource-intensive multimedia machines, just as this person suggests the cable wouldn’t be asked to carry more data than would be necessary for the 8k monitor.

I can imagine a scenario with dual high resolution screens, cameras and location tracking data passing through a single cable for something like a future VR headset. This may end up needing quite a bit more data throughput than the single monitor–and that isn’t even thinking outside the box. That’s still the current use case.

Do you have a crystal ball over there? I still think it’s a clever analogy.

Well then maybe you should read the comment you replied to again? They did not talk about how much data the cable would need. They even hypothesized that the cable format might even change. The meme talks about defining hd and they commented that 8k would be enough. Human eyes will not magically get more resolving so yeah, your analogy is still bad.

I do disagree on the Hz though. It would indeed be nice if we got 8k@360hz at some point in the future but that’s not resolution so I’ll let it slide.

Fair enough.

Just be sure to keep lubricated while you permit all that sliding.

laughs in bnc

Supposedly 0-4 GHz passband and can carry 500 V. No idea what that translates to in terms of resolution/framerate, only that it’s A Lot. Biggest downside is that it’s analog.

Edit: for comparison, iirc my CRT monitor runs somewhere around 20~30 kHz for a max of 1280x1024@75 Hz. I may be comparing apples to oranges here (I’m still learning about analog connectors, how analog video works, etc), buuuuut that suggests that a BNC connector running at its highest rated output would potentially be able to run some fairly large displays.
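A rough way to put numbers on that comparison, as a sketch under assumptions: it uses what I believe is the standard VESA timing for 1280x1024 @ 75 Hz (a 1688 x 1066 total raster) and takes the quoted 4 GHz passband at face value. The horizontal scan rate is lines per second; the pixel clock is the figure that is more comparable to a cable's bandwidth.

```python
# Assumption: VESA timing for 1280x1024 @ 75 Hz uses a 1688 x 1066 total raster.
H_TOTAL = 1688
V_TOTAL = 1066
REFRESH_HZ = 75

# Assumption: the "0-4 GHz" passband quoted in the thread, taken at face value.
BNC_PASSBAND_HZ = 4e9

# Horizontal scan rate: how many lines the beam draws per second.
line_rate_hz = V_TOTAL * REFRESH_HZ
# Pixel clock: how many pixel periods (active + blanking) pass per second.
pixel_clock_hz = H_TOTAL * line_rate_hz

print(f"horizontal scan rate: {line_rate_hz / 1e3:.2f} kHz")
print(f"pixel clock: {pixel_clock_hz / 1e6:.1f} MHz")
print(f"passband / pixel clock: about {BNC_PASSBAND_HZ / pixel_clock_hz:.0f}x")
```

With that timing the line rate comes out nearer 80 kHz than 20~30 kHz, and the pixel clock sits around 135 MHz, so there would still be a lot of headroom below a 4 GHz passband.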

Nah, not only analog.

SDI - with 24G-SDI being the latest standard, able to carry up to 24 Gbps - is alive and well.

I hope HDMI dies off in favour of DisplayPort. We need fewer proprietary standards in the world.